The newly announced Meta Ray-Ban Display glasses, and the ‘Neural Band’ input device that comes with them, are still far from proper augmented reality. But Meta has made several clever design choices that will pay dividends once its true AR glasses are ready for the masses.

The Ray-Ban Display glasses are a new category for Meta. Previous products communicated to the user purely through audio. Now, a small, static monocular display adds quite a bit of functionality to the glasses. Check out our full announcement coverage of the Meta Ray-Ban Display glasses for all the details, and read on for my hands-on impressions of the device.

A Small Display is a Big Improvement

A 20° monocular display isn’t remotely sufficient for proper AR (where virtual content floats in the world around you), but it adds a lot of new functionality to Meta’s smart glasses.

For instance, imagine you want to ask Meta AI for a recipe for teriyaki chicken. On the non-display models, you could definitely ask the question and get a response. But after the AI reads it out to you, how do you keep referring to the recipe? Well, you could either keep asking the glasses over and over, or you could pull your phone out of your pocket and use the Meta AI companion app (at which point, why not just pull the recipe up on your phone in the first place?).

Now with the Meta Ray-Ban Display glasses, you can actually see the recipe instructions as text in a small heads-up display, and glance at them whenever you need.

In the same way, almost everything you could previously do with the non-display Meta Ray-Ban glasses is enhanced by having a display.

Now you can see a whole thread of messages instead of just hearing one read into your ear. And when you reply, you can read your input as it appears in real time to make sure it’s correct, instead of only hearing it played back to you.

When capturing photos and videos you now see a real-time viewfinder to ensure you’re framing the scene exactly as you want it. Want to check your texts without needing to talk out loud to your glasses? Easy peasy.

And the real-time translation feature becomes more useful too. With current Meta glasses you have to listen to two overlapping audio streams at once: the voice of the speaker, and the voice in your ear translating into your language, which can make it harder to focus on the translation. With the Ray-Ban Display glasses, the translation can instead appear as a stream of text, which is much easier to process while the person keeps speaking in the background.

It should be noted that Meta has designed the screen in the Ray-Ban Display glasses to be off most of the time. The screen sits off to the right of your central vision, making it more of a glanceable display than something right in the middle of your field of view. You can turn the display on or off at any time with a double-tap of your thumb and middle finger.

On the technical side, the display is a 0.36MP (600 × 600) full-color LCoS display with a reflective waveguide. Even though the resolution is “low,” it’s plenty sharp across the small 20° field of view. Because the display is monocular (only one eye can see it), the image has a somewhat ghostly look. This doesn’t hamper the functionality of the glasses, but aesthetically it’s not ideal.
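As a rough sanity check on that sharpness claim, the angular resolution can be worked out from the figures above. This back-of-the-envelope sketch assumes the 20° field of view is measured diagonally across the square 600 × 600 image, which isn’t specified here; if it were measured edge to edge, the result would be 600 / 20 = 30 pixels per degree instead.

$$
\text{PPD} \approx \frac{\sqrt{600^2 + 600^2}\ \text{px}}{20^\circ} = \frac{600\sqrt{2}\ \text{px}}{20^\circ} \approx \frac{848.5\ \text{px}}{20^\circ} \approx 42\ \text{px}/^\circ
$$

Either way, concentrating the pixels in such a narrow field of view is what keeps text looking crisp despite the modest pixel count.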

Meta hasn’t said whether it designed the waveguide in-house or is working with a partner. I suspect the latter, and if I had to guess, Lumus would be the likely supplier. Meta says the display can output up to 5,000 nits of brightness, which is enough to make it readily usable even in full daylight (the included Transitions lenses also help).

From the outside, the waveguide is hardly visible in the lens. The most prominent feature is a set of small diagonal markings toward the temple side of the lens.

Meanwhile, the final output gratings are very transparent. Even when the display is on, it’s nearly impossible to see a glint from it in a normally lit room. Meta said outward light leakage is around 2%, which is very impressive.

Aside from being a little chonkier than normal glasses, the Ray-Ban Display glasses score very high on social acceptability, even more so because you don’t need to constantly talk to them to use them, or even hold your hand up to tap the temple. Instead, the so-called Neural Band (based on EMG sensing) allows you to make subtle inputs while your hand is down at your side.

The Neural Band is an Essential Piece to the Input Puzzle

The included Neural Band is just as important to these new glasses as the display itself—and it’s clear that this will be equally important to future AR glasses.

To date, XR devices have relied on controllers, hand-tracking, or voice input. All of these have their pros and cons, but none are particularly fitting for glasses that you’d wear around in public: controllers are too cumbersome; hand-tracking requires line of sight, which means holding your hands awkwardly out in front of you; and voice is problematic both for privacy and in social settings where talking isn’t appropriate.

The Neural Band, on the other hand, feels like the perfect input device for all-day wearable glasses. Because it’s detecting muscle activity (instead of visually looking for your fingers) no line-of-sight is needed. You can have your arm completely to your side (or even behind your back) and you’ll still be able to control the content on the display.

The Neural Band offers several ways to navigate the UI of the Ray-Ban Display glasses. You can pinch your thumb and index finger together to ‘select’; pinch your thumb and middle finger to ‘go back’; and swipe your thumb across the side of your finger to make up, down, left, and right selections. There are a few other inputs too, like double-tapping fingers or pinching and rotating your hand.

For now, you navigate the Ray-Ban Display glasses mostly by swiping around the interface and selecting. In the future, on-board eye-tracking will make navigation even more seamless by letting you simply look at what you want and pinch to select it. That look-and-pinch method already works great on Vision Pro, but it still misses pinches sometimes when your hand isn’t in the right spot, because the cameras can’t always see your hands at quite the right angle. If I could use the Neural Band for pinch detection on Vision Pro, I absolutely would. That’s how well it seems to work already.

While it’s easy enough to swipe and select your way around the Ray-Ban Display interface, the Neural Band shares the same weak spot as every other input method mentioned above: text input. But maybe not for long.

In my hands-on with the Ray-Ban Display, the device was still limited to dictation for text input. So replying to a message or searching for a point of interest still means talking out loud to the glasses.

However, Meta showed me a demo (that I didn’t get to try myself) of being able to ‘write’ using your finger against a surface like a table or your leg. It’s not going to be nearly as fast as a keyboard (or dictation, for that matter), but private text input is an important feature. After all, if you’re out in public, you probably don’t want to be speaking all of your message replies out loud.

The ‘writing’ input method is said to be a forthcoming feature, though I didn’t catch whether they expected it to be available at launch or sometime after.

On the whole, the Neural Band seems like a real win for Meta. Not only does it make the Ray-Ban Display more useful, it also seems like the ideal input method for future glasses with full AR capabilities.

And it’s easy to see a future where the Neural Band becomes even more useful by evolving to include smartwatch and fitness tracking functions. I already wear a smartwatch most of the day anyway… making it my input device for a pair of smart glasses (or AR glasses in the future) is a smart approach.

Little Details Add Up

One thing I was not expecting to be impressed by was the charging case of the Ray-Ban Display glasses. Compared to the bulky charging cases of all of Meta’s other smart glasses, this clever origami-like case folds down flat to take up less space when you aren’t using it. It goes from being big enough to accommodate a charging battery and the glasses themselves, down to something that can easily go in a back pocket or slide into a small pocket in a bag.

This might not seem directly relevant to augmented reality, but it’s more important than you might think. Meta didn’t invent the folding glasses case, but the design shows that the company is really thinking about how this kind of device will fit into people’s lives. The equivalent for its MR headsets would be including a charging dock with every headset, something Meta has yet to do.

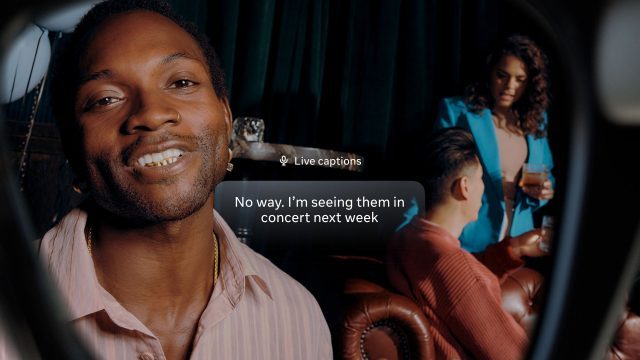

With a display on board, Meta is also repurposing the real-time translation feature as a sort of ‘closed captioning’. Instead of translating to another language, you can turn on the feature and see a real-time text stream of what the person in front of you is saying, even if they’re already speaking your native language. That’s an awesome capability for people who are hard of hearing.

And even for those who aren’t, it might still be useful. Meta says the beam-forming microphones in the Ray-Ban Display can focus on the person you’re looking at while ignoring other nearby voices. The company showed me a demo of this in a room with one person speaking to me and three others having a conversation nearby to my left. It worked relatively well, but it remains to be seen whether it will hold up in louder environments like a noisy restaurant or a club with thumping music.

Meta wants to eventually pack full AR capabilities into glasses of a similar size. And even if they aren’t there yet, getting something out the door like the Ray-Ban Display gives them the opportunity to explore, iterate—and hopefully perfect—many of the key ‘lifestyle’ factors that need to be in place for AR glasses to really take off.

Disclosure: Meta covered lodging for one Road to VR correspondent to attend an event where information for this article was gathered.