Independent tech analyst Ming-Chi Kuo reports that Apple’s XR and smart glasses roadmap will feature multiple XR devices, including a spec-bumped Vision Pro slated to release later this year. At the far end of the spectrum, Kuo also says Apple is making AR glasses, reportedly coming in 2028.

Kuo is a longtime tech analyst and a respected source of Apple product leaks. In a new blog post, Kuo lays out a timeline for a number of Apple XR releases, ostensibly based on various supply chain leaks.

Kuo reports that a new Vision Pro featuring the company’s M5 chip is scheduled for mass production in Q3 2025, with 150,000–200,000 units expected to ship. Apart from the chip upgrade from M2 to M5, this next Vision Pro will reportedly retain the original specs.

Kuo says this iterative approach reflects Vision Pro’s current position as a niche product. Apple reportedly hopes to use the next version to maintain its market presence, reduce component inventory, and further refine XR applications.

Next, Kuo says Apple is preparing a much lighter headset, reportedly called ‘Vision Air’, set to launch in Q3 2027. Vision Air is said to weigh over 40% less than the current Vision Pro, which weighs 21.2–22.9 ounces (600–650 g) excluding the battery.

Kuo says Vision Air will use plastic lenses, magnesium alloy, fewer sensors, and a top-tier iPhone chip. It will also be priced significantly lower to appeal to a broader audience.

The true next-gen Vision device, though, is said to be ‘Vision Pro 2’. Kuo says it could arrive in 2H 2028 with a full redesign, a Mac-grade chip, reduced weight, and a lower price. That would signal Apple’s shift away from niche XR products and toward mainstream adoption.

Meanwhile, Kuo says Apple is investing heavily in smart glasses.

A Ray-Ban-like, audio-first wearable is expected to arrive in Q2 2027, Kuo says. He notes that the company hopes to manufacture 3–5 million units, which points to a significant push toward making its first smart glasses a mainstream success.

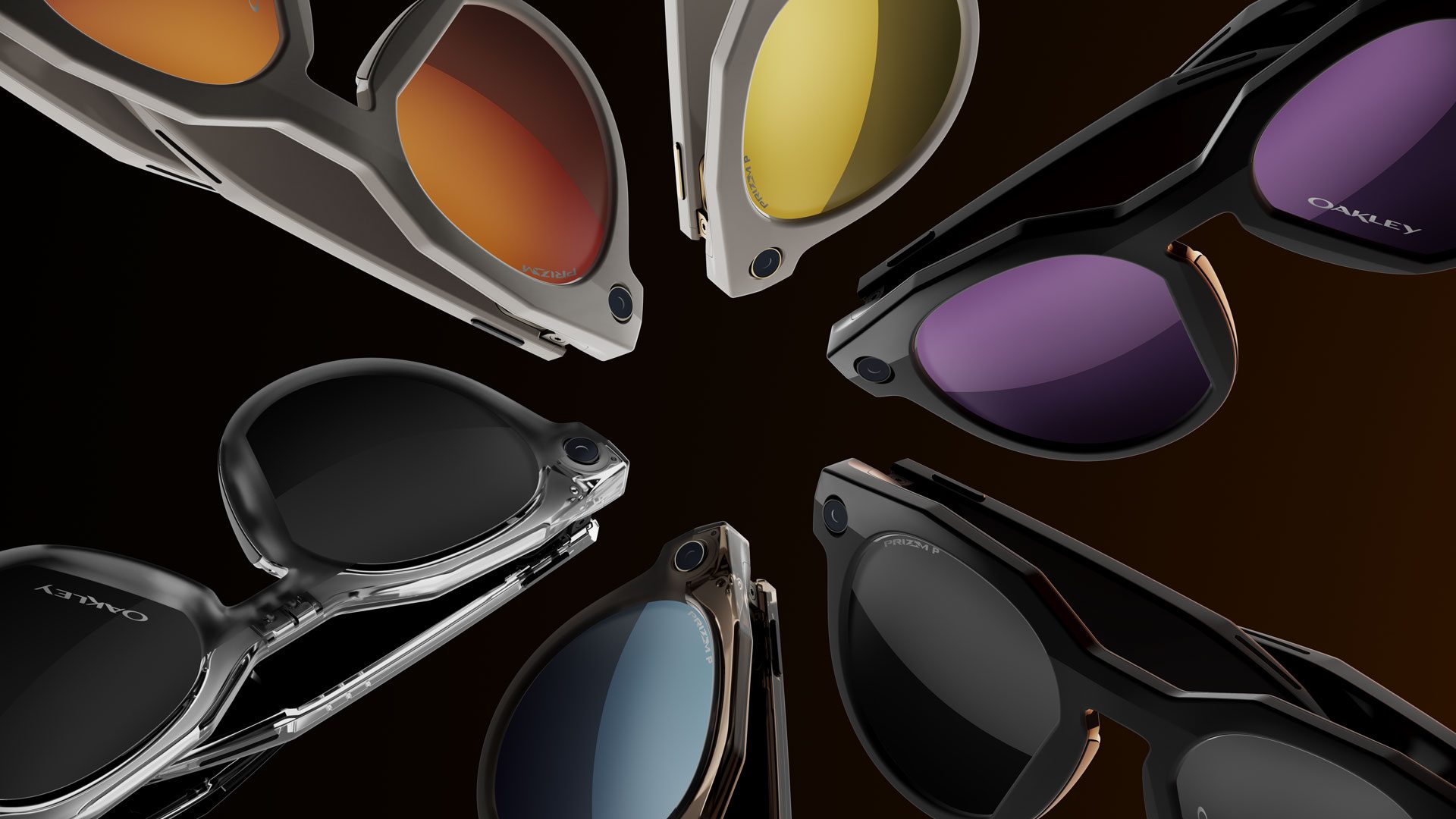

Like Ray-Ban Meta and the recently unveiled Oakley Meta HSTN, Apple’s smart glasses are said to have no display. Instead, they reportedly offer audio playback, photo and video capture, an AI assistant, and both gesture and voice controls.

Arguably the biggest claim in Kuo’s timeline concerns Apple XR Glasses. The analyst says they will include a color display (LCoS + waveguide) and AI features, making them the company’s first true AR glasses. Kuo says Apple is targeting a 2H 2028 release, with a lighter variant being developed in parallel.

This follows reports that Apple may have scrapped a more casual, glasses-style XR viewer, which would have been tethered to Apple devices and used birdbath optics. The device was originally planned for release in Q2 2026. Kuo says it was paused in late 2024 due to insufficient differentiation, especially in terms of weight.

Granted, Apple is one of the most opaque black boxes in tech for a reason. The company typically announces products on stage, usually with a price and release date attached. While Kuo has a fairly reliable track record of reporting insider knowledge about Vision Pro, we’re taking this data dump with an equally sized grain of salt.