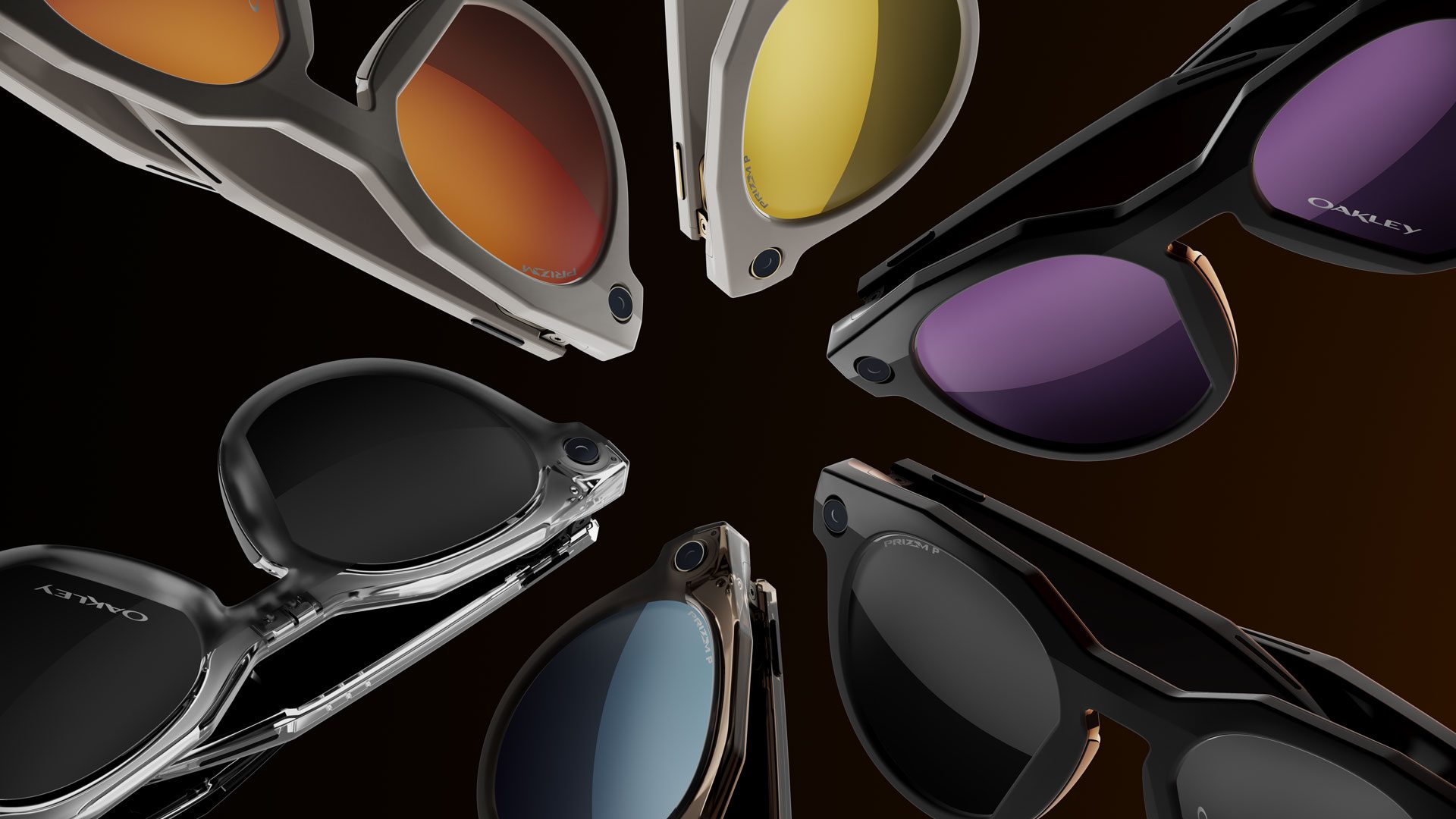

Meta today revealed its next smart glasses built in collaboration with EssilorLuxottica: Oakley Meta glasses.

As a part of the extended collaboration, Meta and EssilorLuxottica today unveiled Oakley Meta HSTN (pronounced HOW-stuhn), the companies’ next smart glasses following the release of Ray-Ban Meta in 2023.

Pre-orders are slated to open on July 11th for the debut version, the Limited Edition Oakley Meta HSTN, which is priced at $499 and features gold accents and 24K PRIZM polarized lenses.

Meanwhile, the rest of the collection, which starts at $399, will be available “later this summer,” Meta says, in six frame and lens color combos, including:

- Oakley Meta HSTN Desert with PRIZM Ruby Lenses

- Oakley Meta HSTN Black with PRIZM Polar Black Lenses

- Oakley Meta HSTN Shiny Brown with PRIZM Polar Deep-Water Lenses

- Oakley Meta HSTN Black with Transitions Amethyst Lenses

- Oakley Meta HSTN Clear with Transitions Grey Lenses

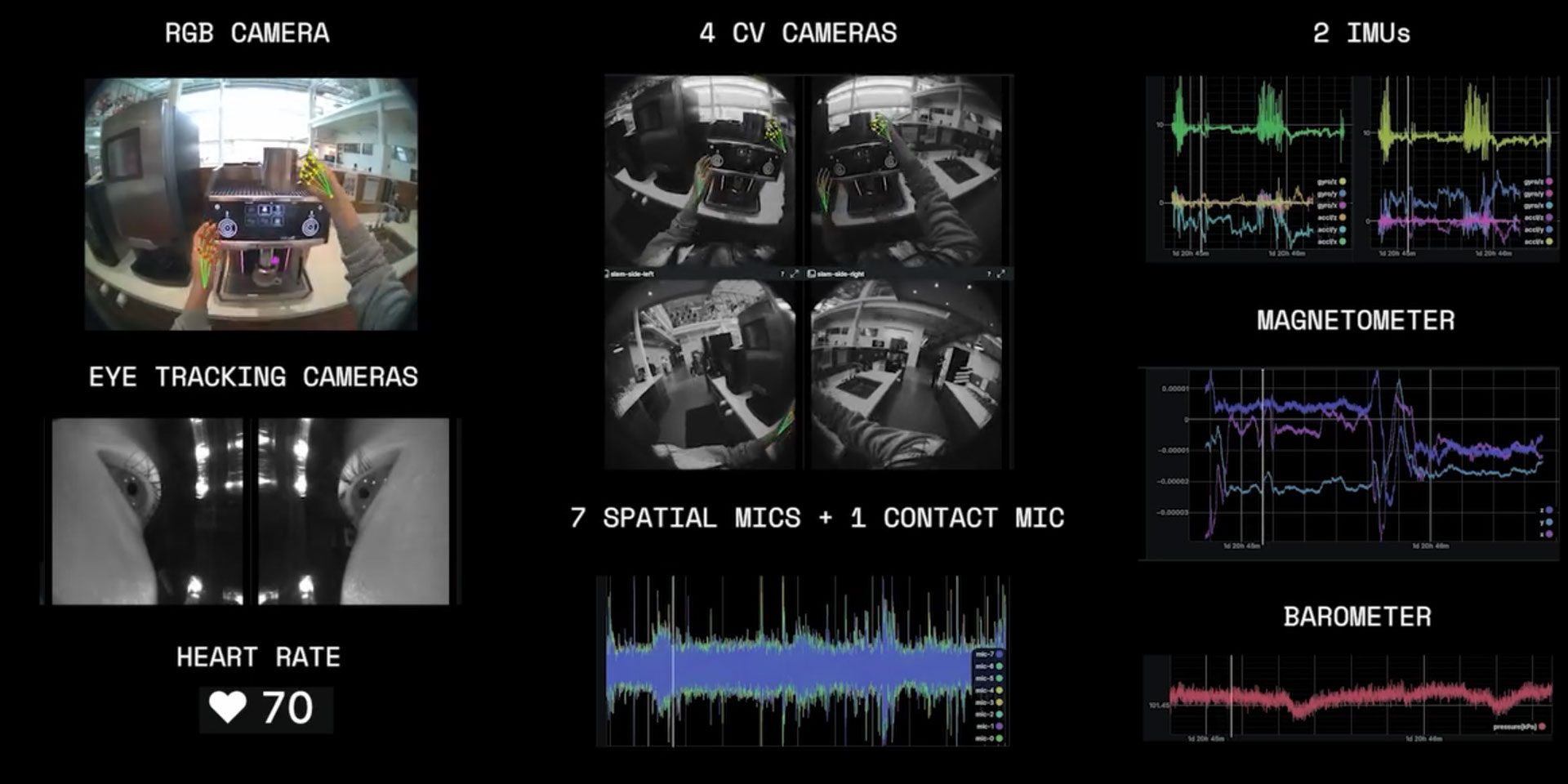

It’s not just a style change though, as the next-gen glasses promise better battery life and higher-resolution video capture than Ray-Ban Meta.

Compared to Ray-Ban Meta glasses, the new Oakley Meta HSTN are said to offer up to “3K video” from the device’s ultra-wide 12MP camera. Ray-Ban Meta’s current second-gen glasses are capped at 1,376 × 1,824 pixels at 30 fps from their 12MP sensor, with both glasses offering up to three minutes of video capture.

What’s more, Oakley Meta HSTN is said to allow for up to eight hours of “typical use” and up to 19 hours in standby mode, effectively representing a doubling of battery life over Ray-Ban Meta.

And like Ray-Ban Meta, Oakley Meta HSTN comes with a charging case, which bumps extended battery life from Ray-Ban Meta’s estimated 32 hours to 48 hours.

It also packs in five mics for doing things like taking calls and talking to Meta AI, as well as off-ear speakers for listening to music while on the go.

Notably, Oakley Meta glasses are said to be water-resistant up to an IPX4 rating, meaning they can take splashes, rain, and sweat, but not submersion or extended exposure to water or other liquids.

The companies say Oakley Meta HSTN will be available across a number of regions, including the US, Canada, UK, Ireland, France, Italy, Spain, Austria, Belgium, Australia, Germany, Sweden, Norway, Finland, and Denmark. The device is also expected to arrive in Mexico, India, and the United Arab Emirates later this year.

In the meantime, you can sign up for pre-order updates through either Meta or Oakley.