Today at Xiaomi’s ‘Human x Car x Home’ event, the Chinese tech giant revealed its answer to Meta and EssilorLuxottica’s series of smart glasses: Xiaomi AI Glasses.

Reports from late last year alleged Xiaomi was partnering with China-based ODM Goertek to produce a new generation of AI-assisted smart glasses, which were rumored to "fully benchmark" against Ray-Ban Meta, a device notably not officially available in China.

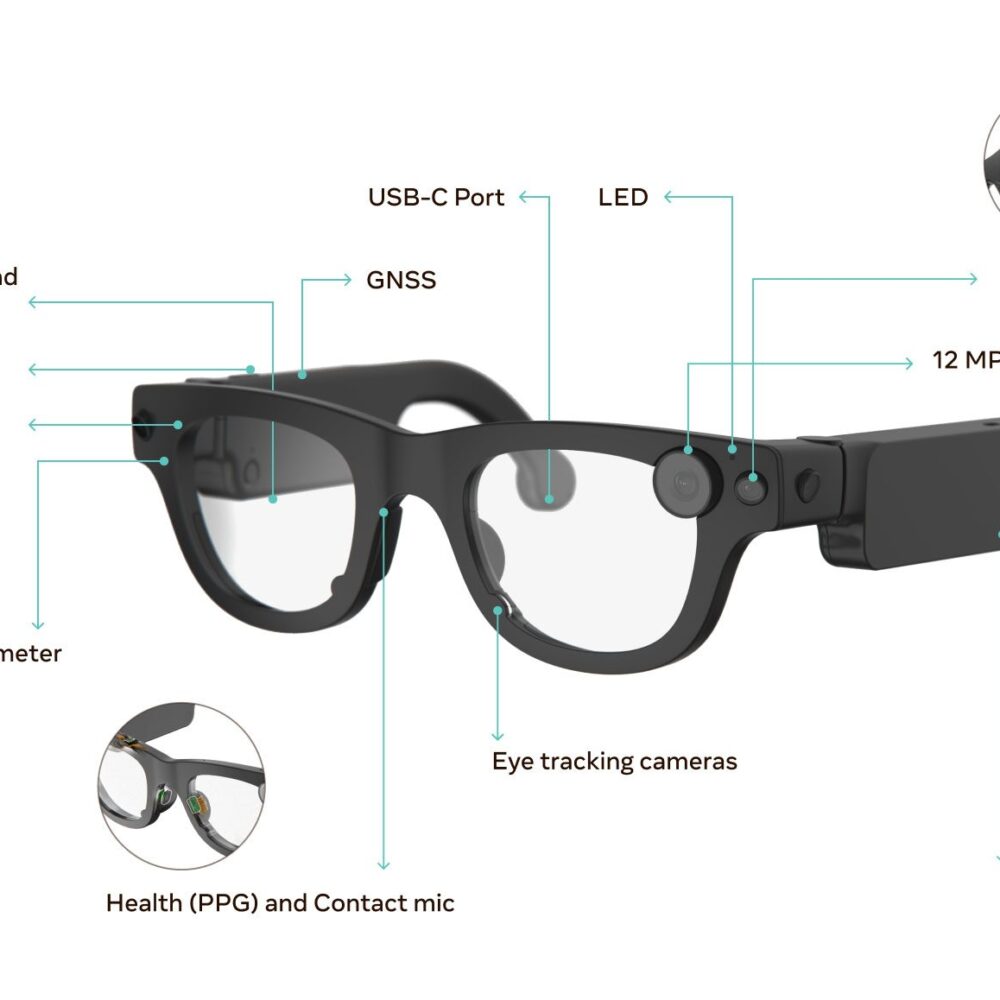

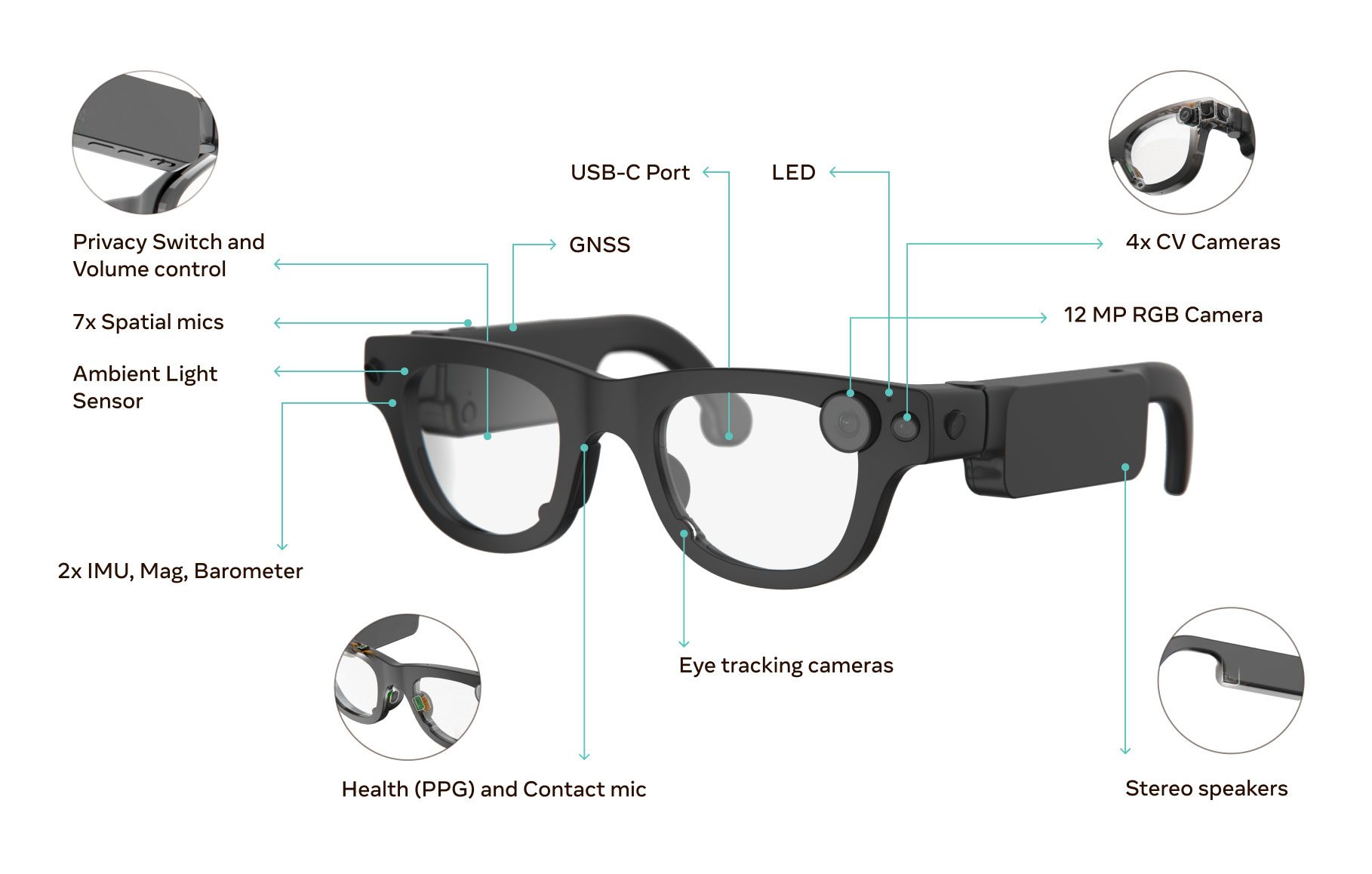

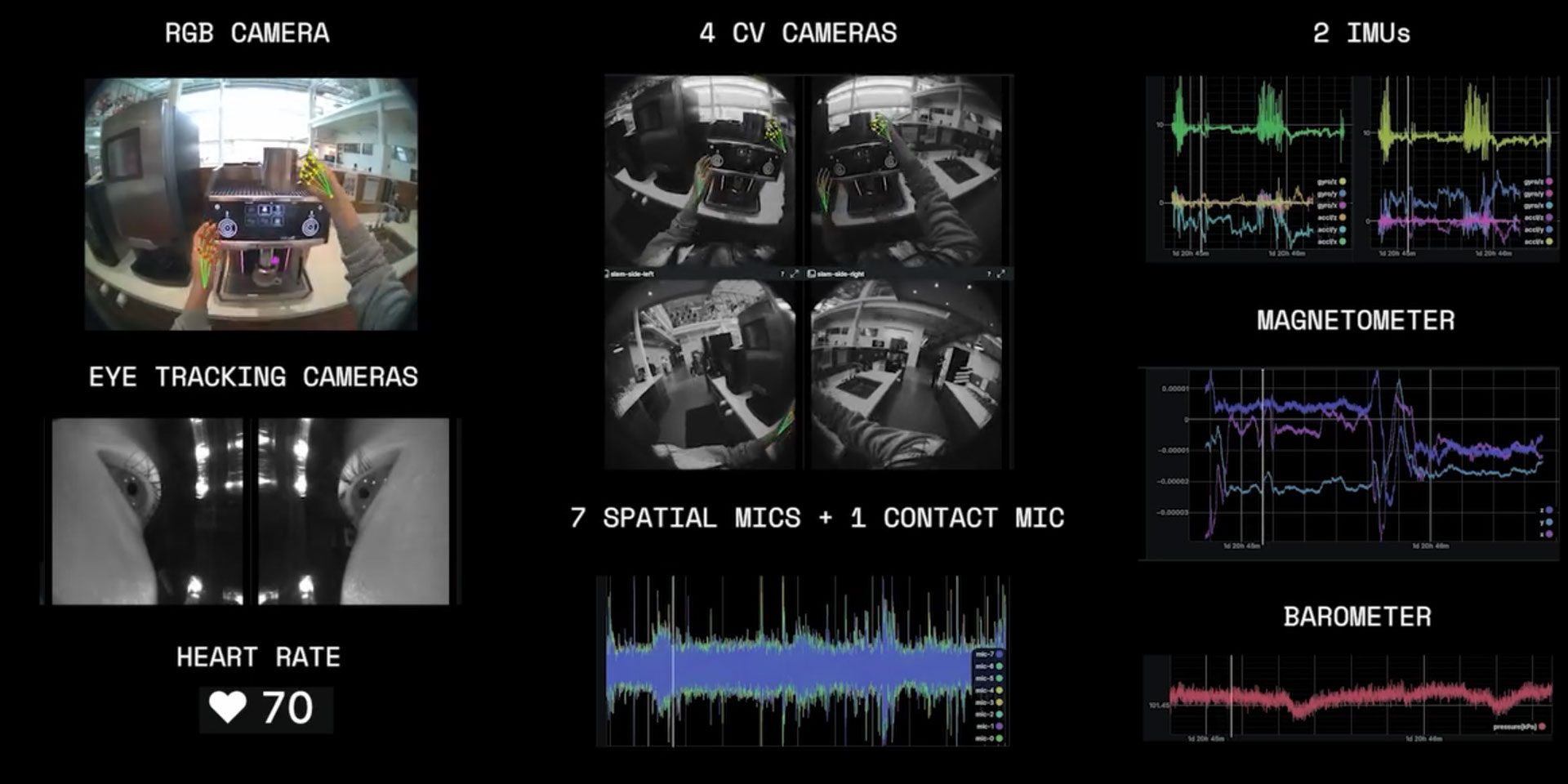

Now, Xiaomi has unveiled its first Xiaomi AI Glasses, which include the onboard Hyper XiaoAi voice assistant, a 12MP camera with electronic image stabilization (EIS), five mics, and two speakers, all driven by a low-power BES2700 Bluetooth audio chip and Qualcomm's Snapdragon AR1. On paper, that puts it pretty much toe-to-toe with Ray-Ban Meta and the recently unveiled Oakley Meta HSTN glasses.

And like Meta's smart glasses, Xiaomi AI Glasses don't include displays of any kind, instead relying on voice and touch input to interact with Hyper XiaoAi. The glasses also offer foreign-language text translation in addition to photo and video capture, which can be triggered either with a voice prompt or a tap on the frames.

Xiaomi rates the glasses' 263mAh silicon-carbon battery at 8.6 hours of mixed use, which the company says can include things like 15 one-minute video recordings, 50 photos, 90 minutes of Bluetooth calls, or 20 minutes of XiaoAi voice conversations. Beyond that mixed-use estimate, Xiaomi says the glasses can last up to 21 hours in standby mode, 7 hours of music playback, or 45 minutes of continuous video capture.

One of the most interesting native features, though, is the ability to simply look at an Alipay QR code (ubiquitous across China) and pay for goods and services with a voice prompt. Xiaomi says the feature is expected to arrive as an OTA update in September 2025.

The device is set to launch today in China, although global availability remains uncertain. Xiaomi says the glasses were "optimized for Asian face shapes," which may rule out a broader global launch for this particular version.

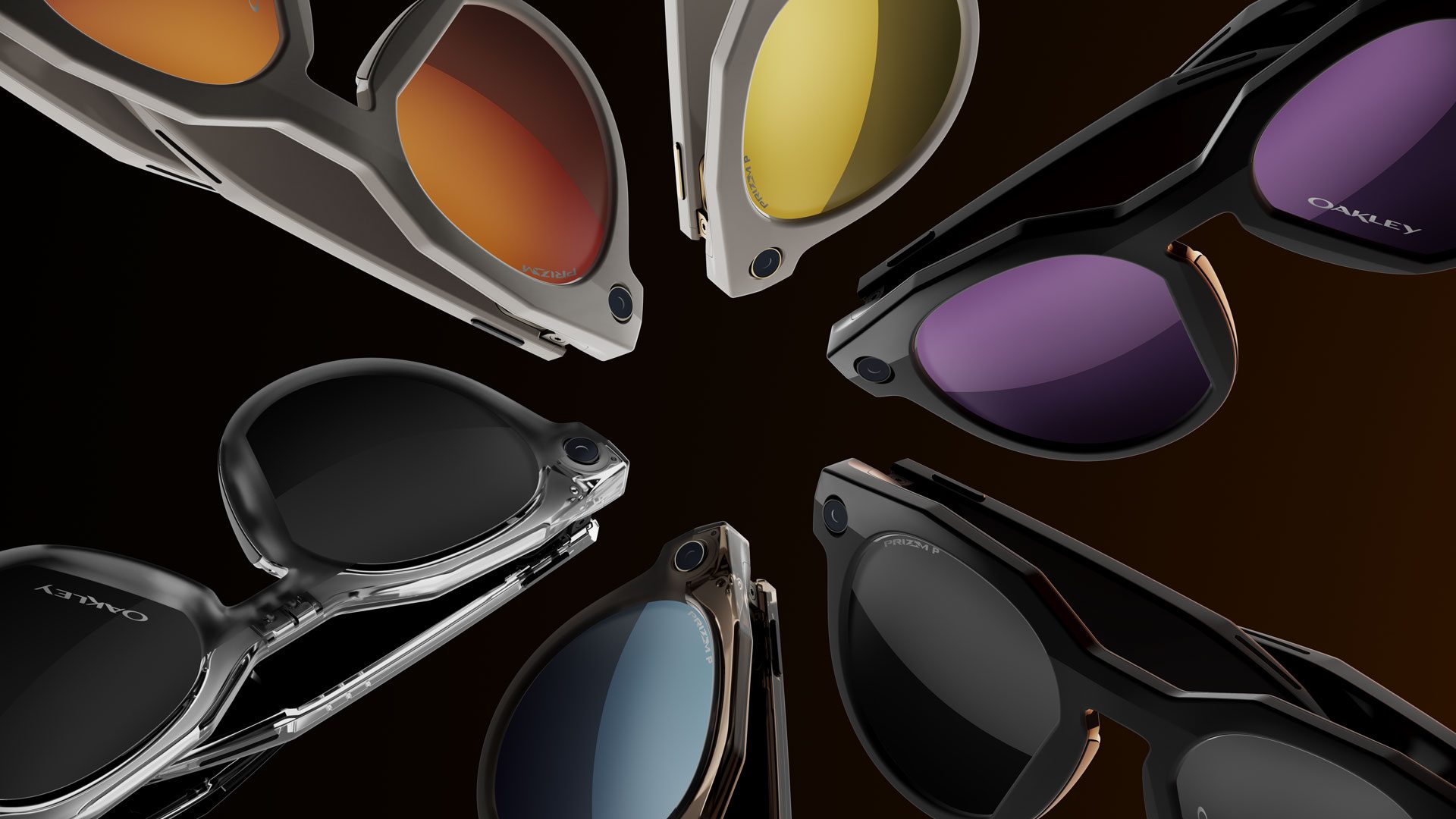

While there's only a single frame shape to choose from, it will be offered in three colors (black, plus semi-transparent tortoiseshell brown and parrot green) along with three lens options, which aim to outdo Ray-Ban Meta and Oakley Meta on cool factor.

The base model with clear lenses is priced at ¥1,999 RMB (~$280 USD), while customers can also choose electrochromic shaded lenses at ¥2,699 RMB (~$380 USD) and colored electrochromic shaded lenses at ¥2,999 RMB (~$420 USD).

Xiaomi's electrochromic lenses offer adjustable shading, letting the wearer change the tint intensity to their comfort by touching the right side of the frame. Notably, the company says the base model can be fitted with prescription lenses through its online and offline partners.

Xiaomi AI Glasses are the company's first mass-produced, camera-equipped smart glasses marketed under the Xiaomi brand.

Many of Xiaomi's earlier glasses, such as the Mijia Smart Audio Glasses 2, were sold only in China and lacked cameras entirely. The exception was the limited-release Mijia Glasses Camera from 2022, which featured a 50MP primary camera, an 8MP periscope camera, and a micro-OLED heads-up display.

Here are the specs we’ve gathered so far from Xiaomi’s presentation. We’ll be filling in more as information comes in:

| Spec | Details |
| --- | --- |
| Camera | 12MP |
| Lens | ƒ/2.2 large aperture, 105° angle lens |
| Photo & video capture | 4,032 x 3,024 photos, 2K/30FPS video recording, EIS video stabilization |
| Video length | 45-minute continuous recording cap |
| Weight | 40 g |
| Charging | USB Type-C |
| Charging time | 45 minutes |
| Battery | 263mAh silicon-carbon battery |
| Battery life | 8.6 hours of mixed use |
| Audio | Two frame-mounted speakers |
| Mics | 4 mics + 1 bone conduction mic |
| Design | Foldable design |


According to Chinese-language outlet Vrtuoluo, the device has already drawn strong initial interest on the e-commerce platform JD.com, with over 25,000 reservations as of 9:30 AM CST.