Bigscreen Beyond 2 orders flooded in last Thursday at a surprising pace. Now, the PC VR headset maker says its latest slim and light headset outsold the original by an impressive margin in its first 24 hours, with first-day sales equivalent to what Beyond 1 sold in its first six months.

We’ve already heard some impressive stats following Bigscreen’s launch of Beyond 2 orders. In 25 minutes, Beyond 2 outsold the first day of Beyond 1 sales. In the first hour, it doubled Beyond 1 launch day sales. Within 10 hours of orders opening, Beyond 2 sold more than Beyond 1 did in its first four months.

In an X post on Friday, Bigscreen founder and CEO Darshan Shankar revealed the most impressive sales stat yet:

“In the first 24 hours, Beyond 2 has sold 10 TIMES as many Beyond 1s sold on its launch day 2 years ago. In the first 24 hours, Beyond 2 has sold as much as Beyond 1 did in its first 6 months of sales. That’s exceptional.”

Shankar says the company did this with zero ad spend, noting “[w]e didn’t pay influencers to pump our product. We didn’t pay an agency for an expensive video. No advertising.”

The company did, however, send a few early Beyond 2 units to reviewers. Shankar puts the number at “[l]ike 10 units,” with recipients including Tested, Thrillseeker, MRTV and VR Flight Sim Guy, among others.

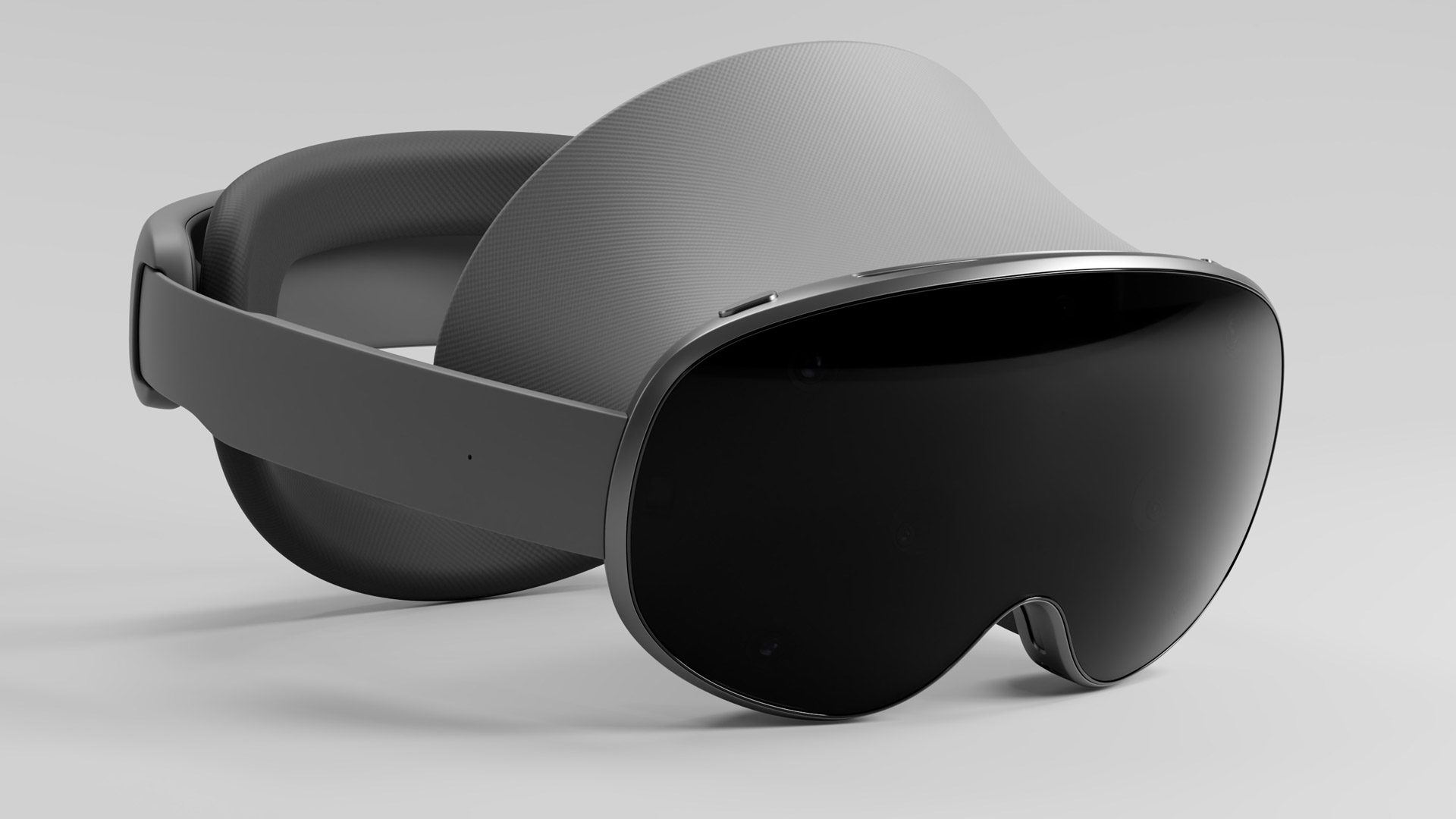

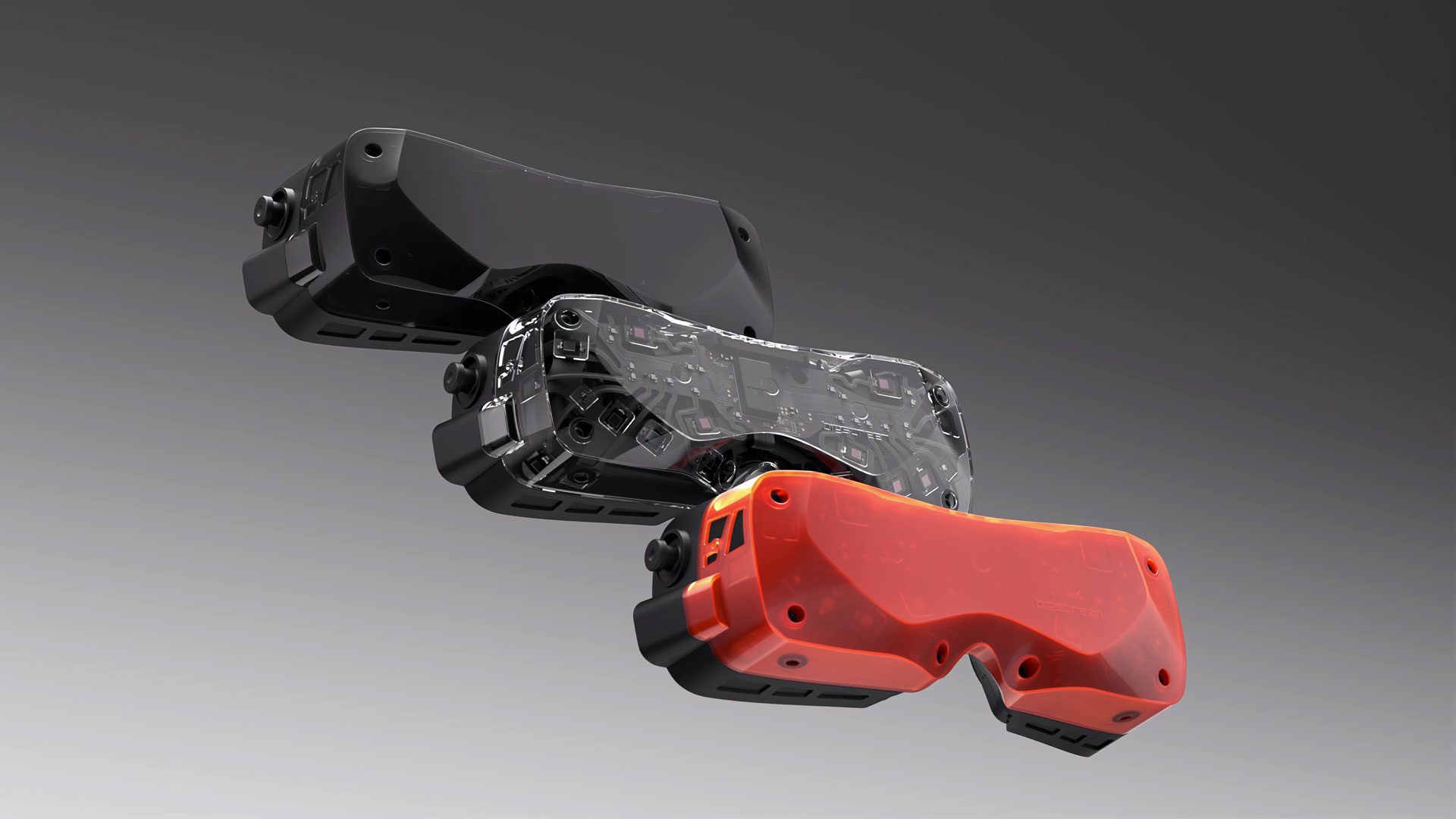

In case you missed the news (you can check out the specs, price and launch schedule here), Beyond 2 comes in two flavors, one without eye-tracking (Beyond 2) and one with (Beyond 2e), priced at $1,019 and $1,219 respectively.

While it packs the same dual 1-inch 2,560 × 2,560 micro-OLED displays as the original Beyond, the biggest improvements are a larger field-of-view (FOV) and better clarity, thanks to a new pancake lens design. This bumps Beyond 2 to a 116-degree diagonal FOV, up from the original’s 102 degrees. The headset also includes an adjustable IPD mechanism in a lighter 107g design.

Although the first batches were quoted to ship in April (Beyond 2) and May (Beyond 2e), at the time of this writing new orders of Beyond 2 and Beyond 2e are quoted to ship in June.