iFixit got their hands on a pair of Meta Ray-Ban Display smart glasses, so we finally get to see what’s inside. Is it repairable? Not really. But if you can somehow find replacement parts, you could at least potentially swap out the battery.

The News

Meta launched the $800 smart glasses in the US late last month, marking the company’s first pair with a heads-up display.

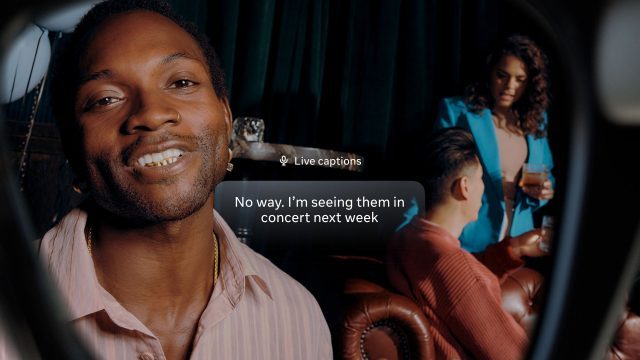

Serving up a monocular display, Meta Ray-Ban Display allows for basic app interaction beyond the standard stuff seen (or rather ‘heard’) in the company’s audio-only Ray-Ban Meta and Oakley Meta glasses. It lets you view and respond to messages, get turn-by-turn walking directions, and even use the display as a viewfinder for photos and video.

iFixit’s latest video shows that cracking into the glasses and attempting repairs is pretty fiddly, but not entirely impossible.

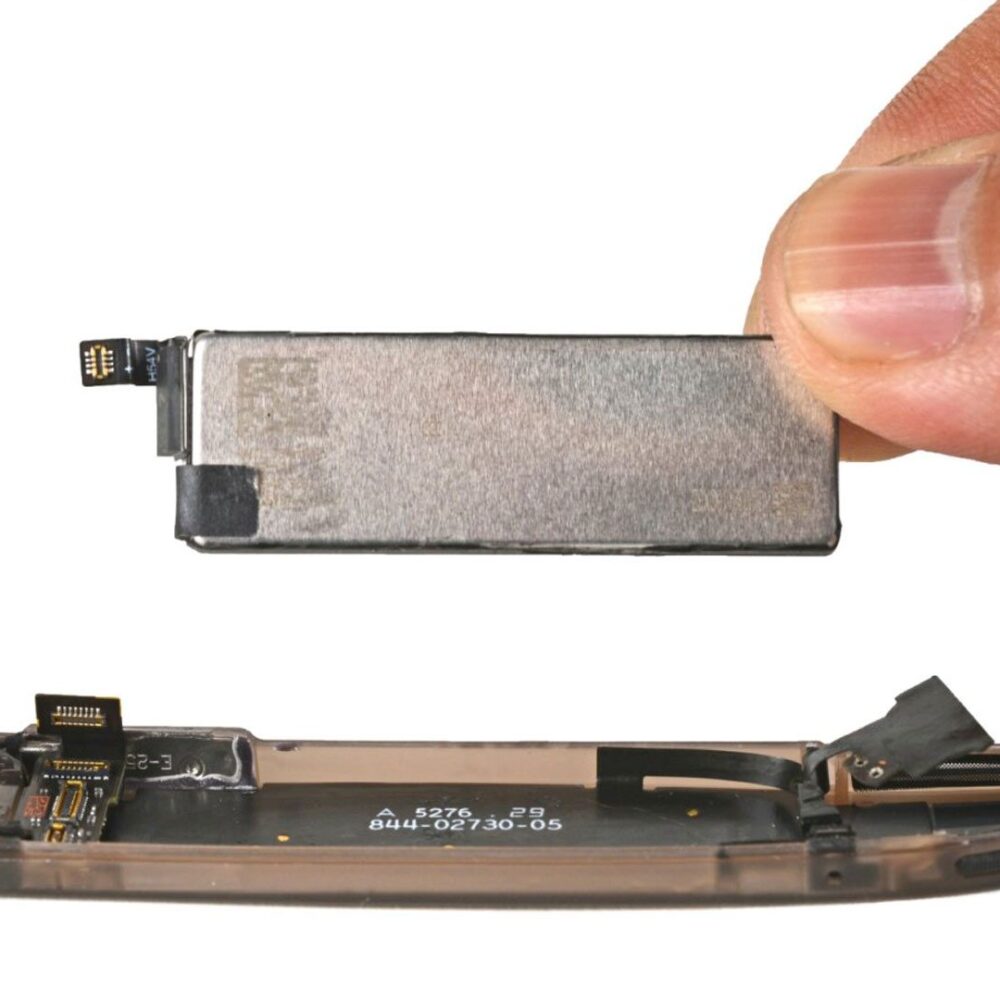

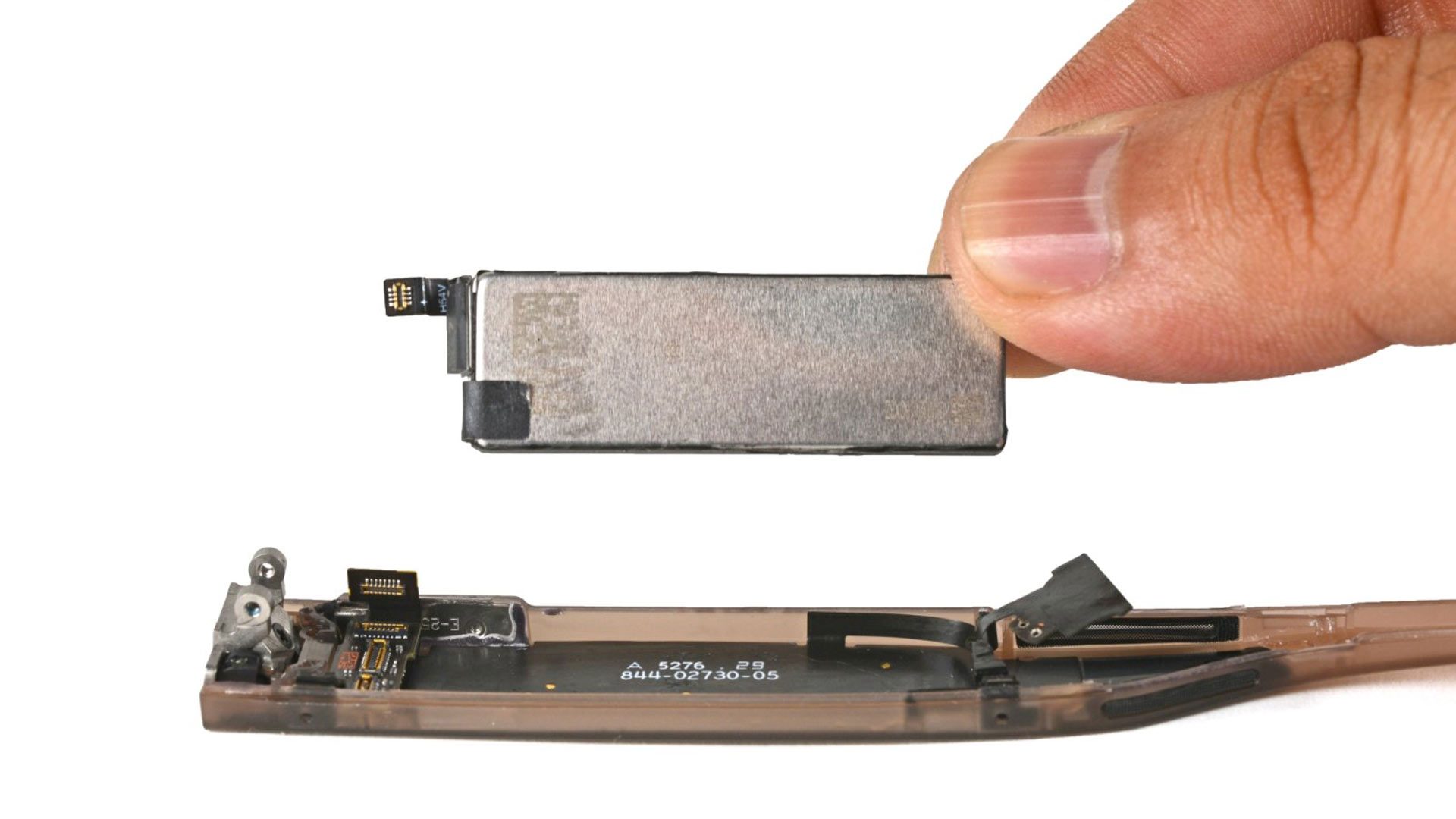

The first thing you’d probably want to do eventually is replace the battery, which requires splitting the right arm down a glued seam, a common theme with the entire device. Getting to the 960 mWh internal battery, which is slightly larger than the one seen in the Oakley Meta HSTN, means sacrificing the device’s IPX4 splash resistance rating.

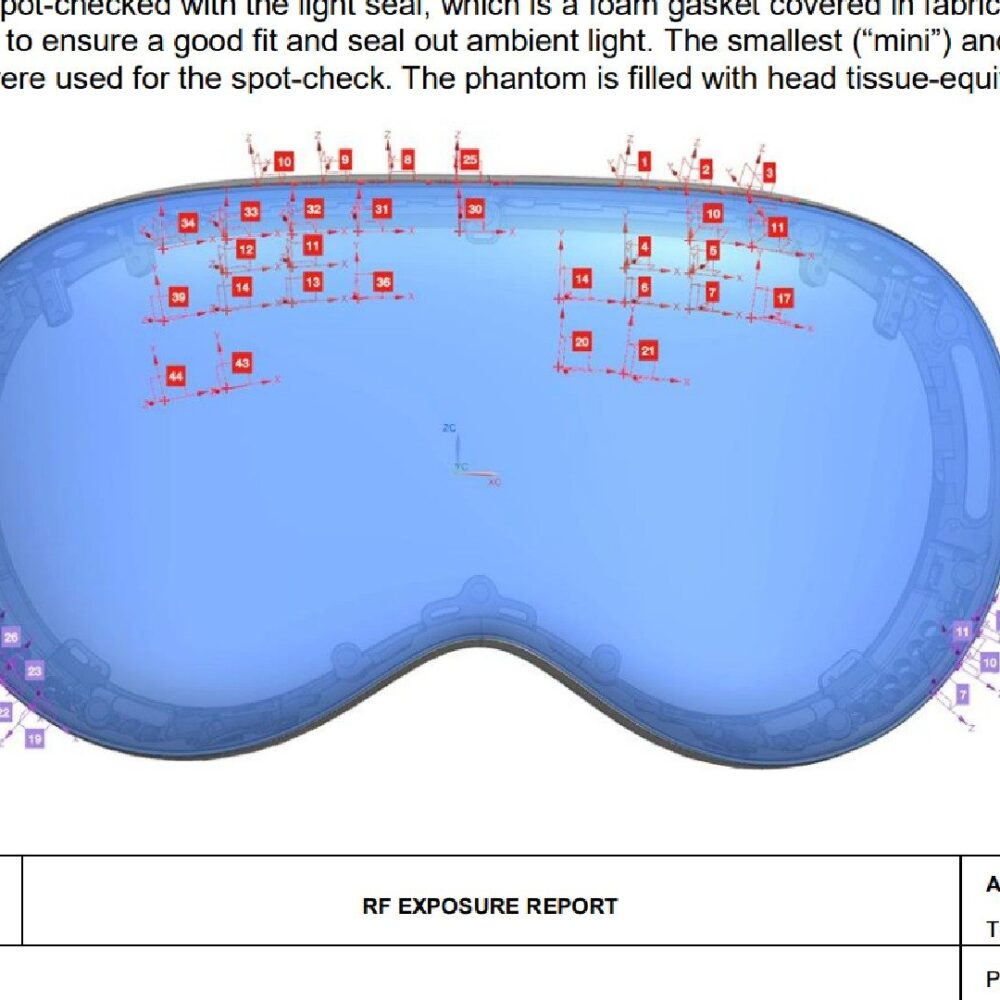

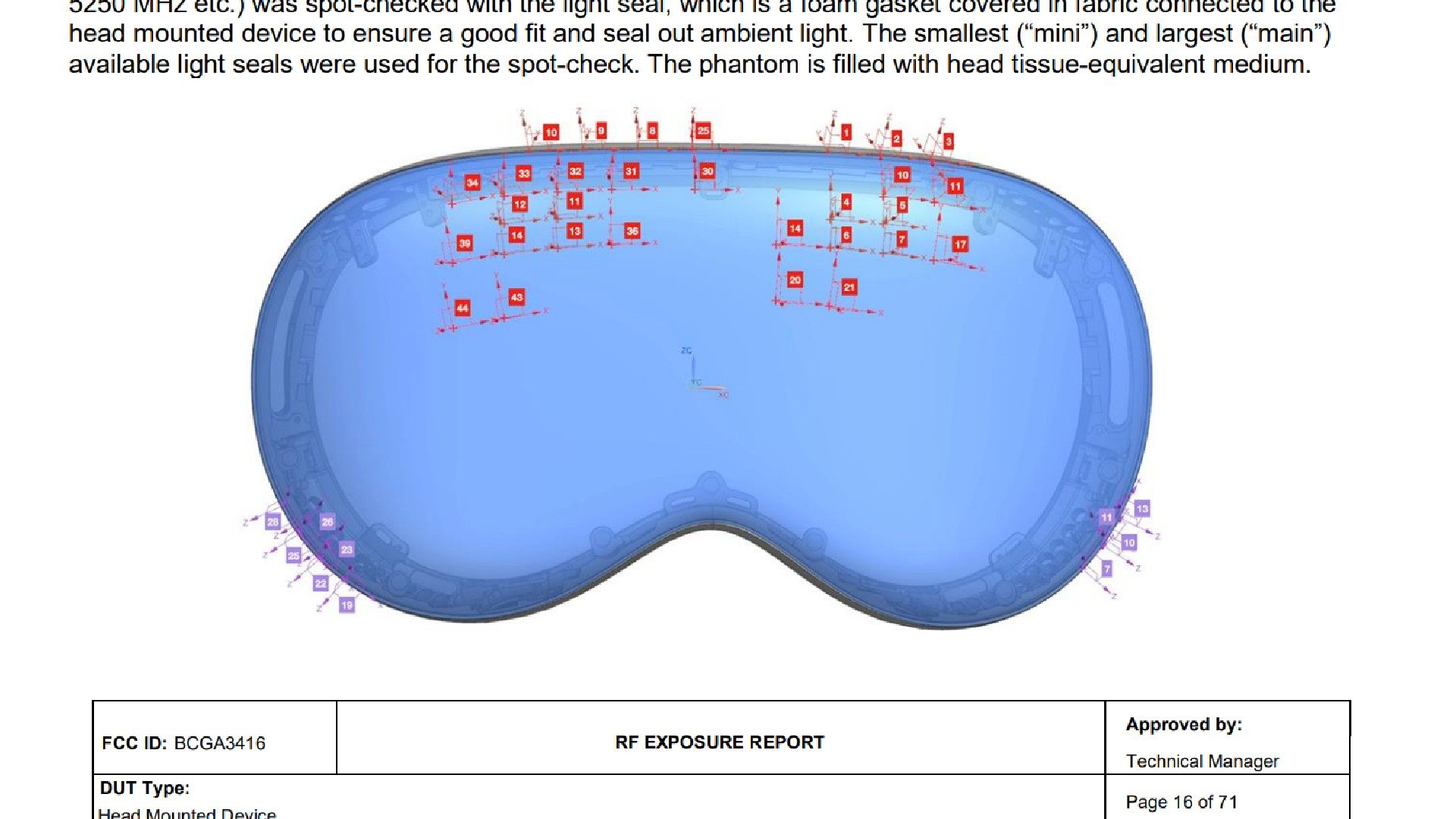

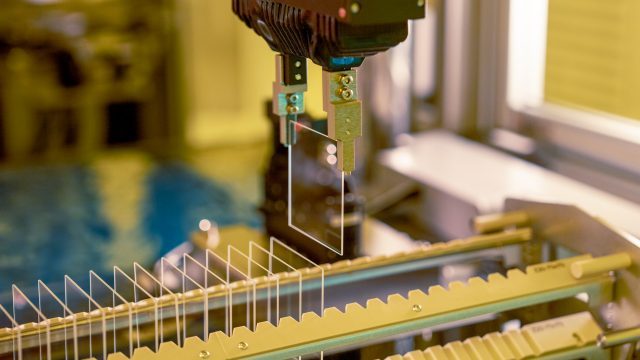

The rest of the work is just as delicate, but iFixit manages to go all the way down to the dual speakers, motherboard, Snapdragon AR1 chipset, and liquid crystal on silicon (LCoS) light engine, the last of which was captured with a CT scanner to show off just how micro Meta has managed to get its most delicate part.

Granted, this is a teardown and not a repair guide as such. All of the components are custom, and replacement parts aren’t available yet. You would also need a few specialized tools and an appetite for the risk of destroying a pretty hard-to-come-by device.

For more, make sure to check out iFixit’s full article, which includes images and detailed info on each component. You can also see the teardown in action in the full nine-minute video below.

My Take

Meta isn’t really thinking deeply about reparability when it comes to smart glasses right now, which isn’t exactly shocking. Like earbuds, smart glasses are all about miniaturization to hit an all-day wearable form factor, making their plastic, glued-together exterior a pretty clear necessity in the near term.

Another big factor: the company is probably banking on the fact that prosumers willing to shell out $800 this year will likely be happy to do the same when Gen 2 eventually arrives. That could be in two years, but I’m betting less if the device performs well enough in the market. After all, Meta launched Quest 2 in 2020, the year after releasing the original Quest, so I don’t see why they wouldn’t do the same here.

That said, I don’t think we’ll see any real degree of reparability in smart glasses until we get to the sort of sales volumes currently seen in smartphones. And that’s just for a baseline of readily available replacement parts, third-party or otherwise.

So while I definitely want a pair of smart glasses (and eventually AR glasses) that look indistinguishable from standard frames, that also kind of means I have to be okay with eventually throwing away a perfectly cromulent pair of specs just because I don’t have the courage to open it up, or know anyone who does.