Snap, the company behind Snapchat, today announced it’s working on the next iteration of its Spectacles AR glasses (aka ‘Specs’), which are slated to release publicly sometime next year.

Snap first released its fifth generation of Specs (Spectacles ’24) exclusively to developers in late 2024, later opening up sales to students and teachers in January 2025 through an educational discount program.

Today, at AWE 2025, Snap announced it’s launching an updated version of the AR glasses for public release next year, which Snap co-founder and CEO Evan Spiegel teases will be “a much smaller form factor, at a fraction of the weight, with a ton more capability.”

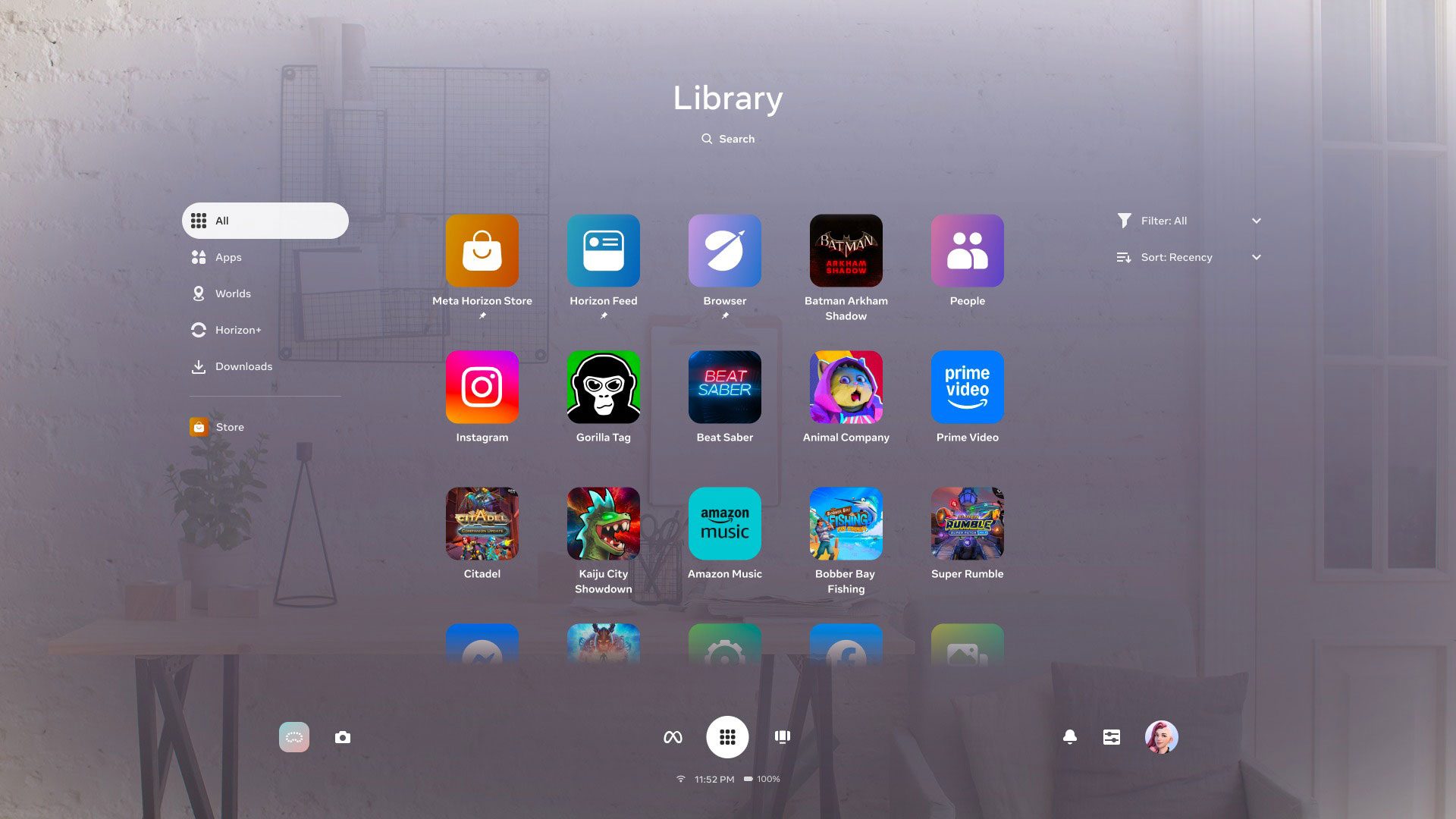

There’s no pricing or availability yet beyond the 2026 launch window. To boot, we haven’t even seen the device in question, although we’re betting it won’t be as chunky as the developer-only Spectacles ’24.

Spiegel also noted that Snap’s four-million-strong library of Lenses, which add 3D effects, objects, characters, and transformations in AR, will be compatible with the forthcoming version of Specs.

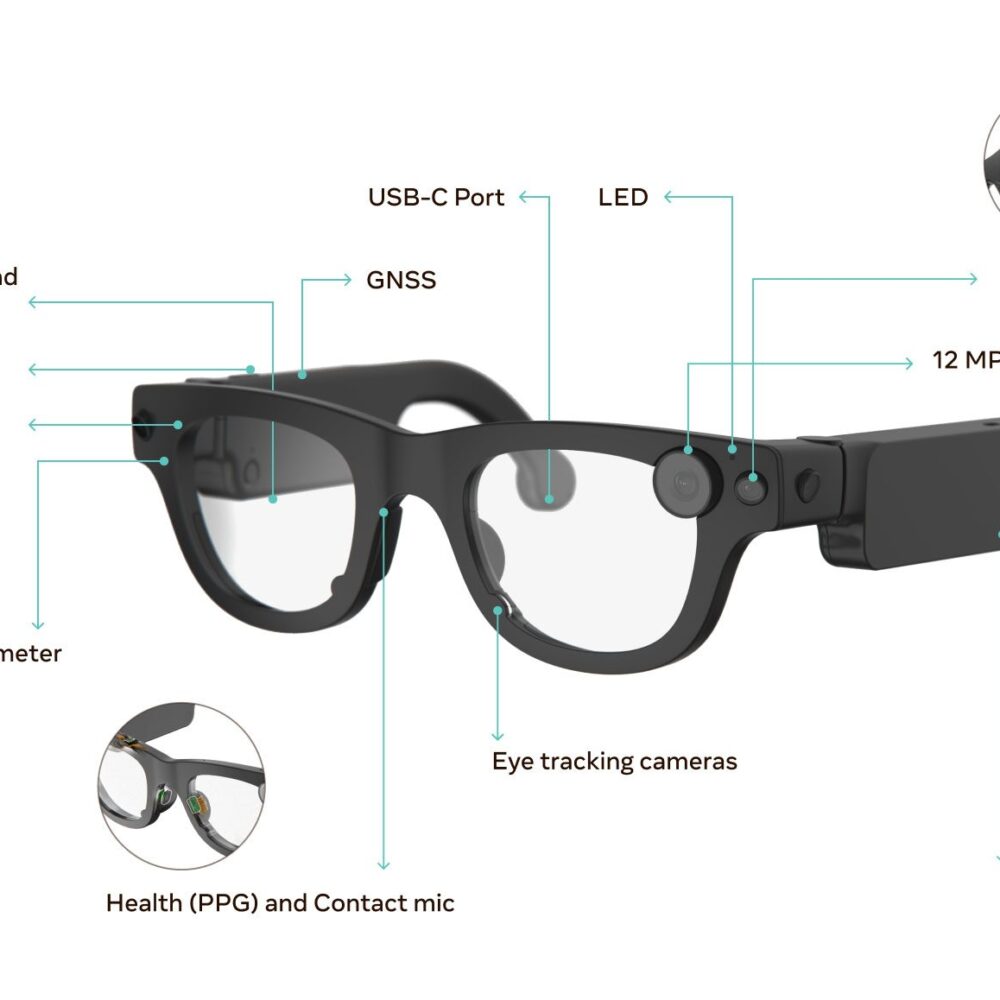

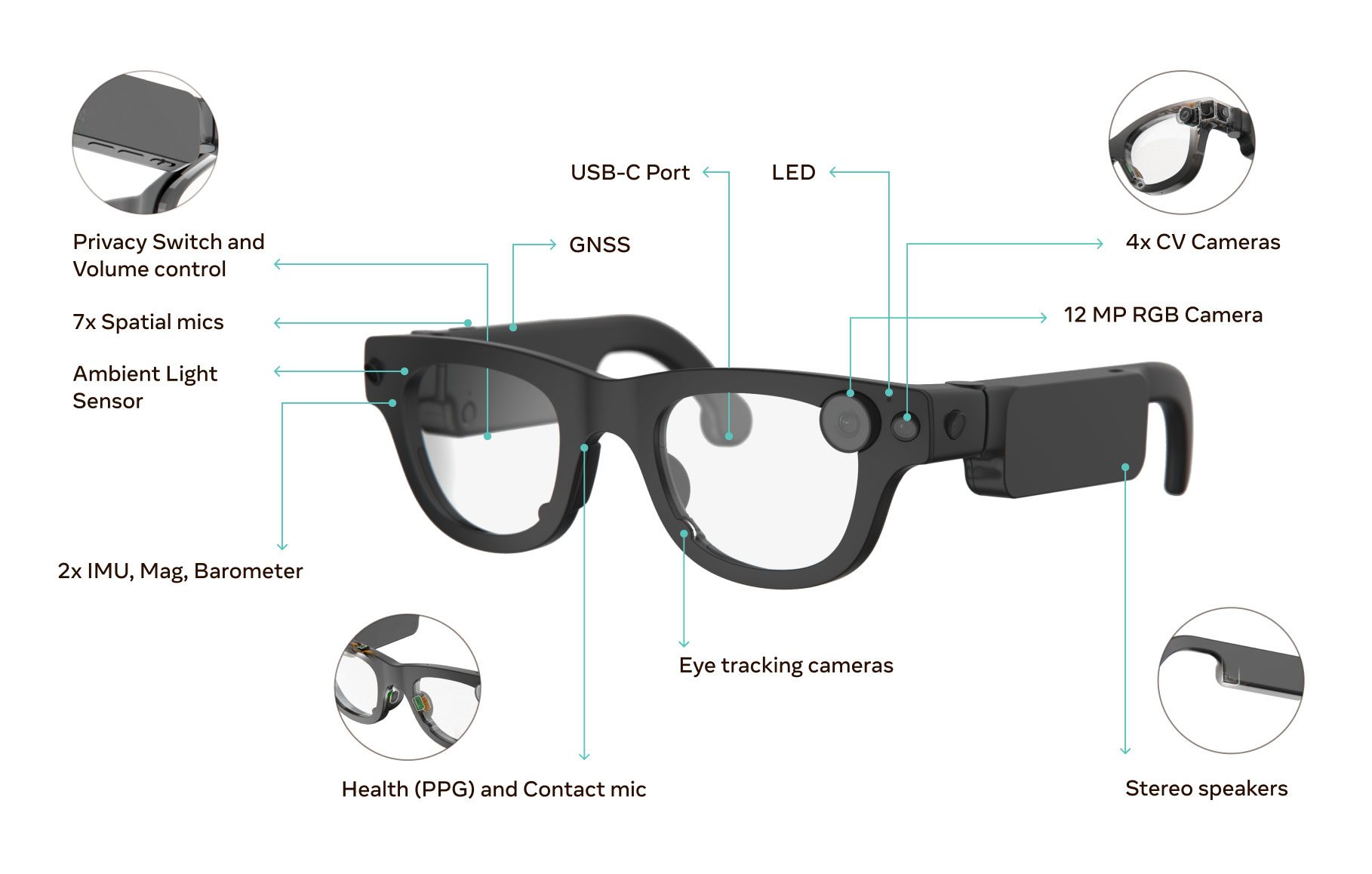

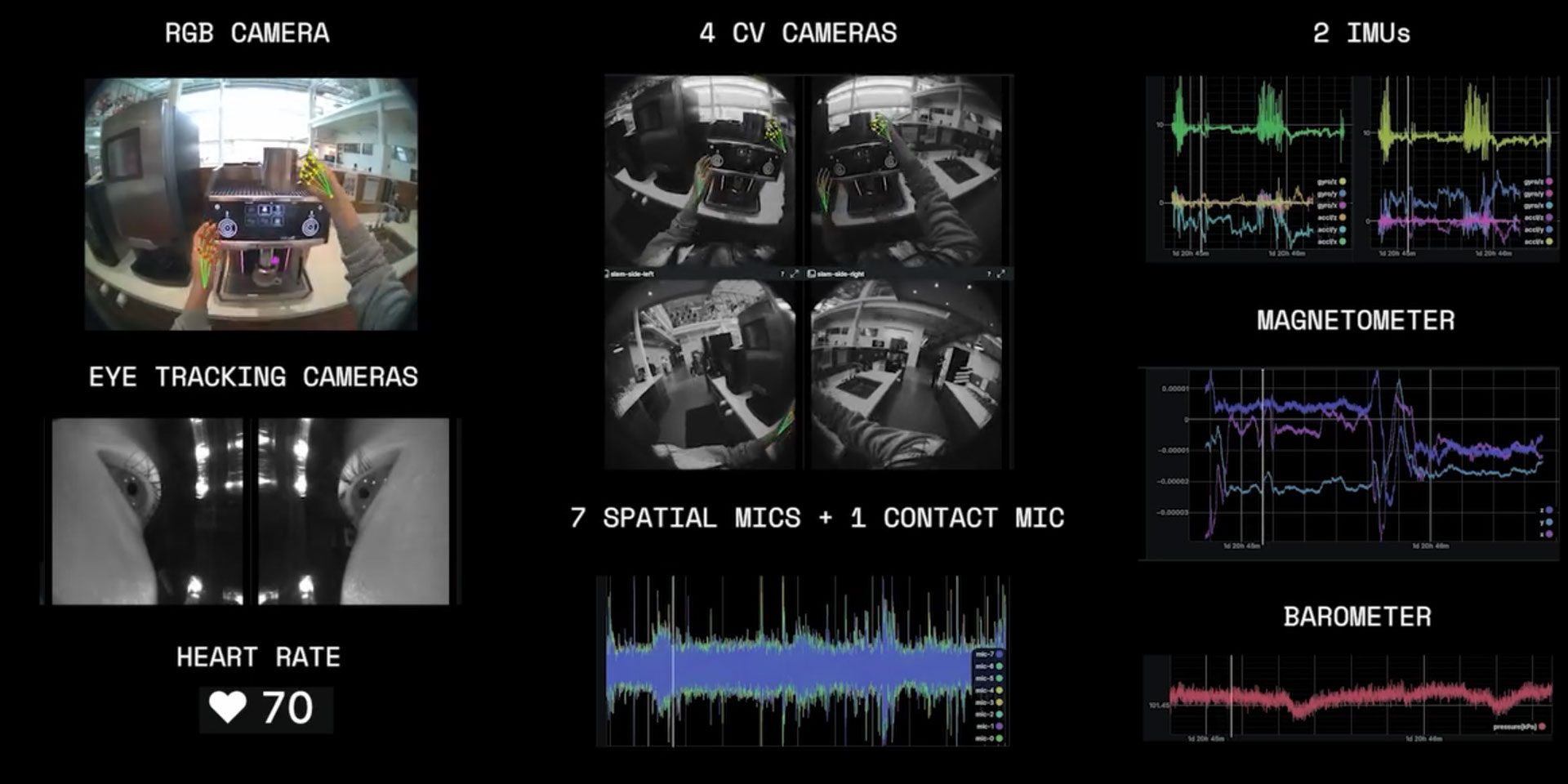

While the company isn’t talking specs (pun intended) right now, the version introduced in 2024 packs in a 46° field of view via stereo waveguide displays, which include automatic tint, and dual liquid crystal on silicon (LCoS) miniature projectors boasting 37 pixels per degree.

As a standalone unit, the device features dual Snapdragon processors, stereo speakers for spatial audio, six microphones for voice recognition, as well as two high-resolution color cameras and two infrared computer vision cameras for 6DOF spatial awareness and hand tracking.

There’s no telling how these specs will change on the next version, although we’re certainly hoping for more than the 2024 model’s 45-minute battery life.

And as the company gears up to release its first publicly available AR glasses, Snap also announced major updates coming to Snap OS. Key enhancements include new integrations with OpenAI and Google Cloud’s Gemini, allowing developers to create multimodal AI-powered Lenses for Specs. Examples of such Lenses include real-time translation, currency conversion, recipe suggestions, and interactive adventures.

Additionally, new APIs are said to expand spatial and audio capabilities, including the Depth Module API, which anchors AR content in 3D space, and the Automated Speech Recognition API, which supports 40+ languages. The company’s Snap3D API is also said to enable real-time 3D object generation within Lenses.

For developers building location-based experiences, Snap says it’s also introducing a Fleet Management app, Guided Mode for seamless Lens launching, and Guided Navigation for AR tours. Upcoming features include Niantic Spatial VPS integration and WebXR browser support, enabling a shared, AI-assisted map of the world and expanded access to WebXR content.

Releasing Specs to consumers could put Snap in a unique position as a first mover; companies including Apple, Meta, and Google still haven’t released their own AR glasses, although consumers should expect the race to heat up this decade. The overall consensus is that these companies are looking to own a significant piece of AR, as many hope the device class will unseat smartphones as the dominant computing platform in the future.