Scott Myers, Snap’s top executive in charge of Specs, has left the company ahead of the planned release of its consumer AR glasses.

The News

Myers reportedly ended his six-year tenure at the company following a dispute with Snap CEO Evan Spiegel, according to tech outlet Sources, which characterized the dispute as a “blow-up” centered around the company’s strategy.

A Snap spokesperson confirmed Myers’ departure on Reddit, noting that Specs are still on track for release this year:

“Scott Myers has decided to step down from his role at Snap. We thank him for his contributions and wish him the best in his next chapter. We can’t wait to bring Specs to the world later this year. We remain focused on disciplined execution and long term value creation for our developer partners, community and shareholders.”

Myers came to Snap in 2020 to oversee all aspects of Specs, including hardware, software, product and operations. He previously held senior positions at SpaceX, Apple, and Nokia, according to his LinkedIn profile.

This comes at a critical moment for Snap. In September 2025, Spiegel noted in an open letter that the company is heading into a make-or-break “crucible moment” in 2026, positioning Specs as an integral part of the company’s future.

“This moment isn’t just about survival. It’s about proving that a different way of building technology, one that deepens friendships and inspires creativity, can succeed in a world that often rewards the opposite,” Spiegel said.

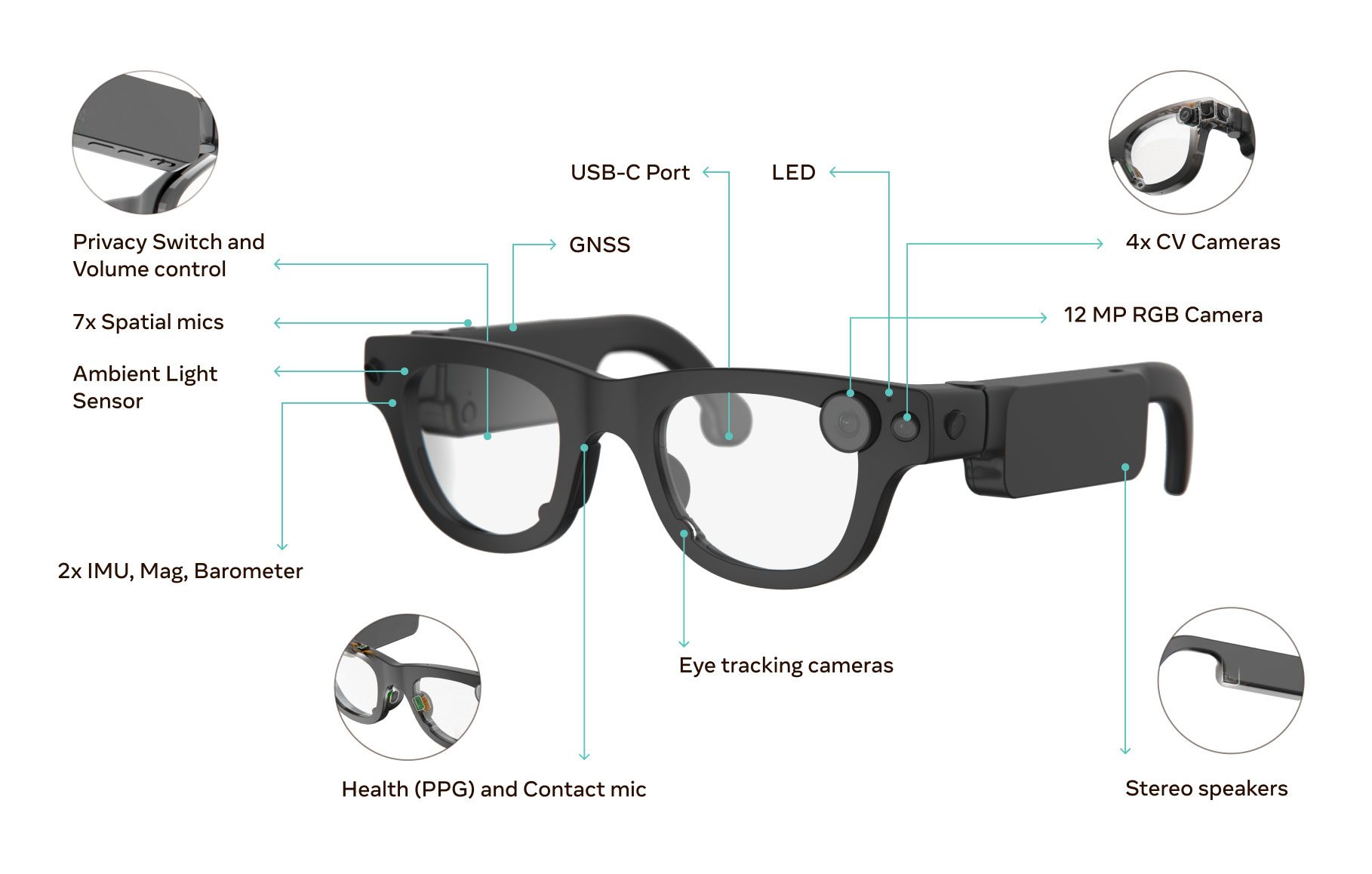

The consumer version of Specs is set to be the company’s sixth-generation glasses, following the release of its fifth-gen hardware in 2024. As ‘true’ AR glasses (i.e., not smart glasses like Meta Ray-Ban Display), the device is ostensibly set to reach consumers ahead of comparable hardware from some of Snap’s largest competitors.

My Take

It’s uncertain why Myers left Snap; the company even disputed the “blow-up” narrative with TechCrunch, providing no other reasoning, which makes Myers’ departure an even greater mystery—especially on the eve of the company’s big consumer AR glasses launch.

Speculatively speaking, there is at least one recent sign that could point to trouble brewing in the background. Myers’ departure follows a recent move by the company to form a wholly-owned subsidiary dedicated to Specs.

Snap says the so-called ‘Specs Inc’ subsidiary will primarily allow for “new partnerships and capital flexibility,” including the potential for minority investment. More concretely, Specs Inc also insulates Snap from any potential failure.

Whether that betrays a lack of confidence is unclear, although the departure of the top executive who oversaw the release of the fourth- and fifth-gen versions (notably the only two with displays and AR capabilities) doesn’t smack of confidence.