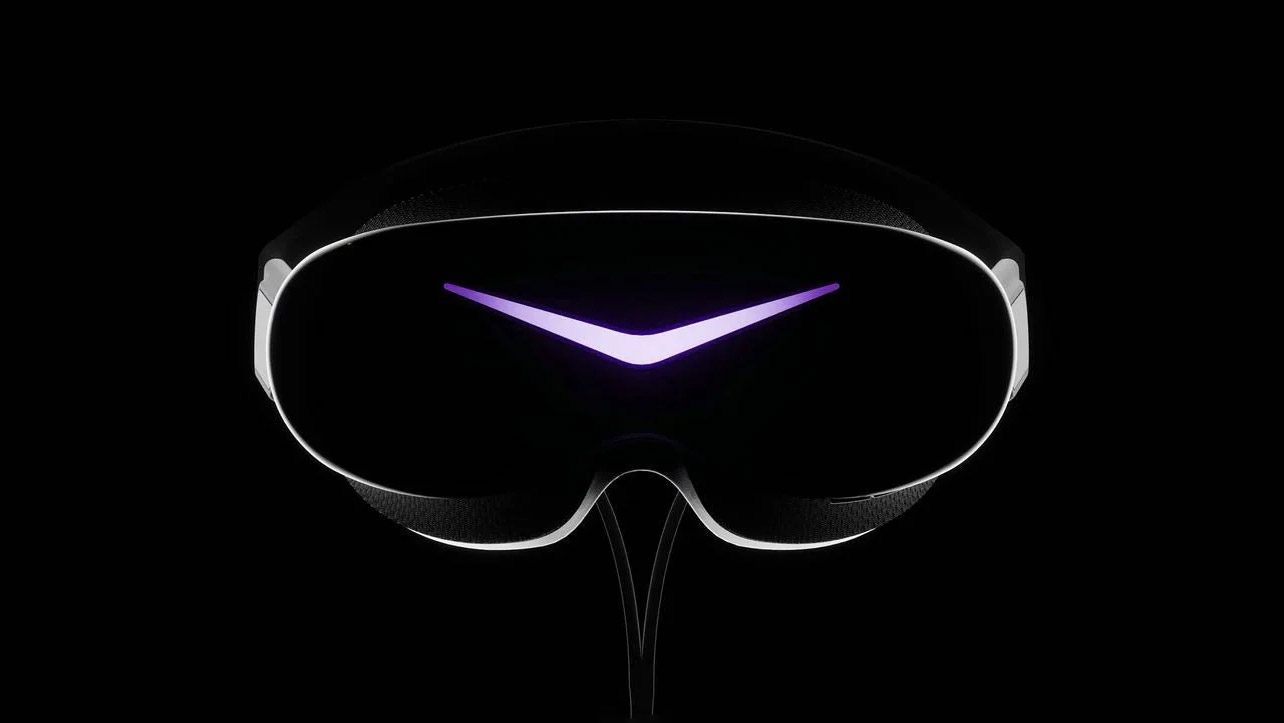

Project Moohan was a no-show at Samsung’s Galaxy product event yesterday, giving the company even fewer opportunities to announce and release the Vision Pro competitor by year’s end.

Samsung confirmed in early August that Project Moohan is coming before the end of the year, although we still don’t know its name, release date, or price.

At its recent Galaxy event, the company unveiled a number of mobile devices, including the Galaxy S25 FE, Galaxy Tab S11, and Galaxy Tab S11 Ultra. Just not Project Moohan.

A recent report from Korea’s Newsworks maintained Moohan would be featured at Samsung Unpacked, which is rumored to take place on September 29th. Still, that’s awfully close to the Galaxy product event, which took place yesterday.

According to the Newsworks report, Project Moohan (which means 'infinite' in Korean) will launch first in South Korea on October 13th, priced somewhere between ₩2.5 million and ₩4 million, or roughly $1,800 to $2,900 USD.

Still, it's fairly odd that Samsung hasn't done more to hype the mixed reality headset, which will be the first to run Google's Android XR operating system.

Samsung has kept a fairly tight lid on Moohan since it was announced in December 2024. It has often been available for demos behind closed doors at events like MWC in March and Google I/O in June, although it was conspicuously absent from Unpacked in January and the second Unpacked in July… and now the Galaxy product event.

With reports that it will launch in South Korea first, at around $2,000, it could be that Samsung is expecting fairly low sales volumes from the standalone MR headset, which admittedly has some fairly good specs under the hood.

Here's the short of it: Moohan packs in a Qualcomm Snapdragon XR2+ Gen 2, dual micro‑OLED panels (no resolution specs yet), pancake lenses, automatic interpupillary distance (IPD) adjustment, support for eye and hand tracking, an optional magnetically attached light shield, and a removable external battery pack. It's also getting VR motion controllers of some sort, but we still haven't seen those either.

You can learn more by checking out our hands-on with Project Moohan from December 2024, which covers everything from comfort and display clarity to how Android XR looks a lot like Horizon OS combined with visionOS.