Researchers at Meta Reality Labs and Stanford University have unveiled a new holographic display that could deliver virtual and mixed reality experiences in a form factor the size of standard glasses.

In a paper published in Nature Photonics, Stanford electrical engineering professor Gordon Wetzstein and colleagues from Meta and Stanford outline a prototype device that combines ultra-thin custom waveguide holography with AI-driven algorithms to render highly realistic 3D visuals.

Although it is based on waveguides, the device’s optics aren’t transparent like those on HoloLens 2 or Magic Leap One, which is why it is referred to as a mixed reality display rather than an augmented reality one.

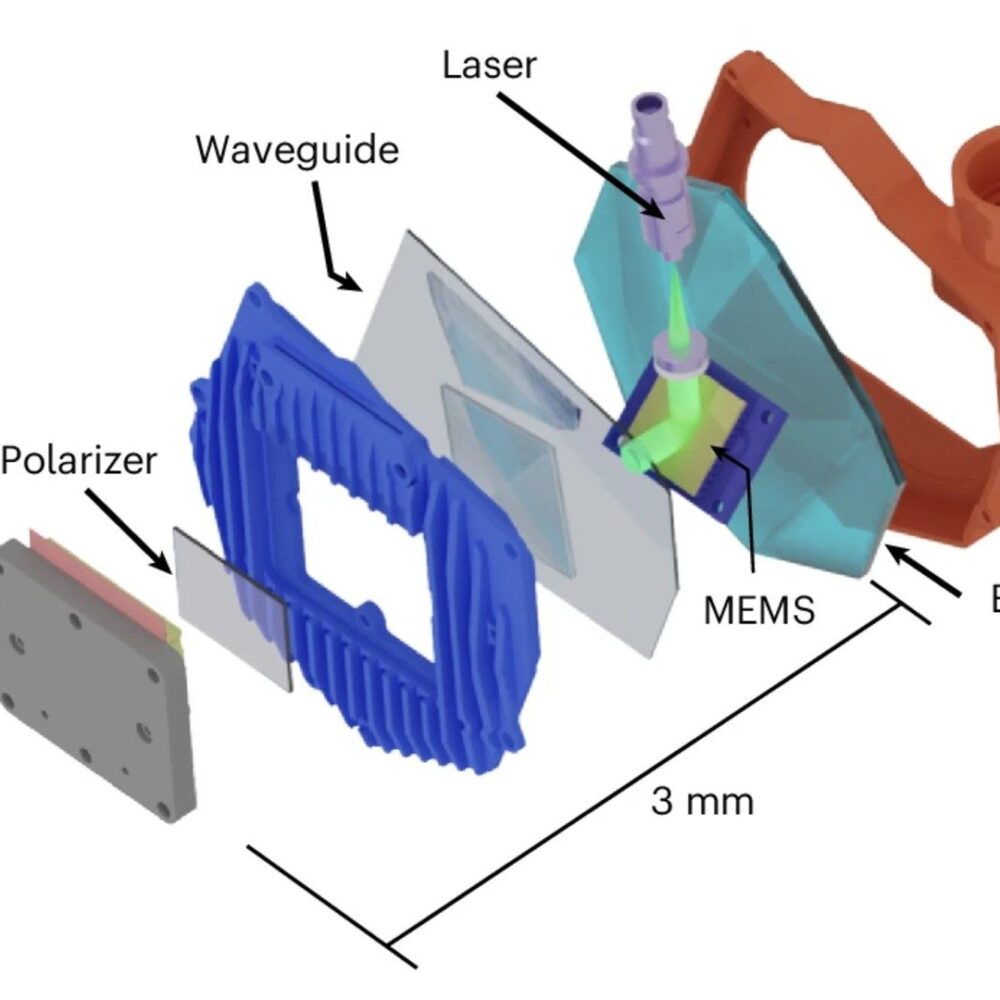

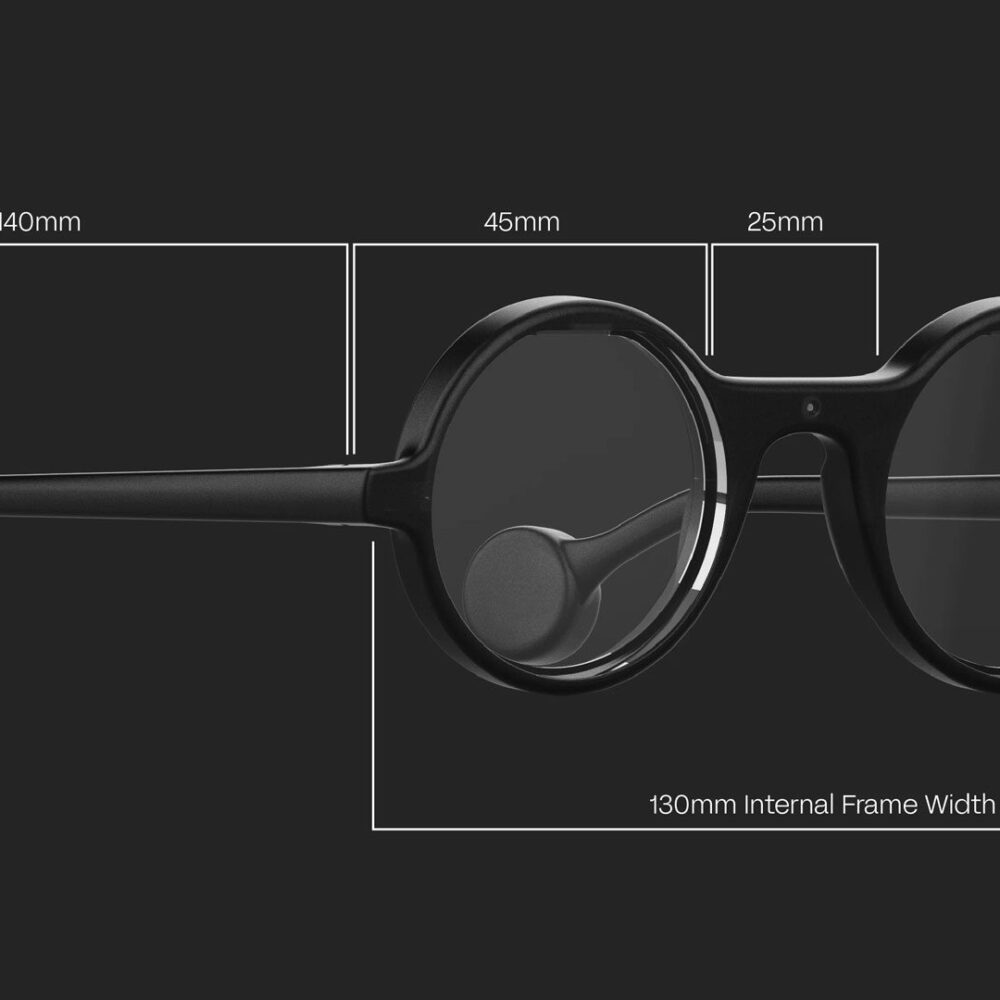

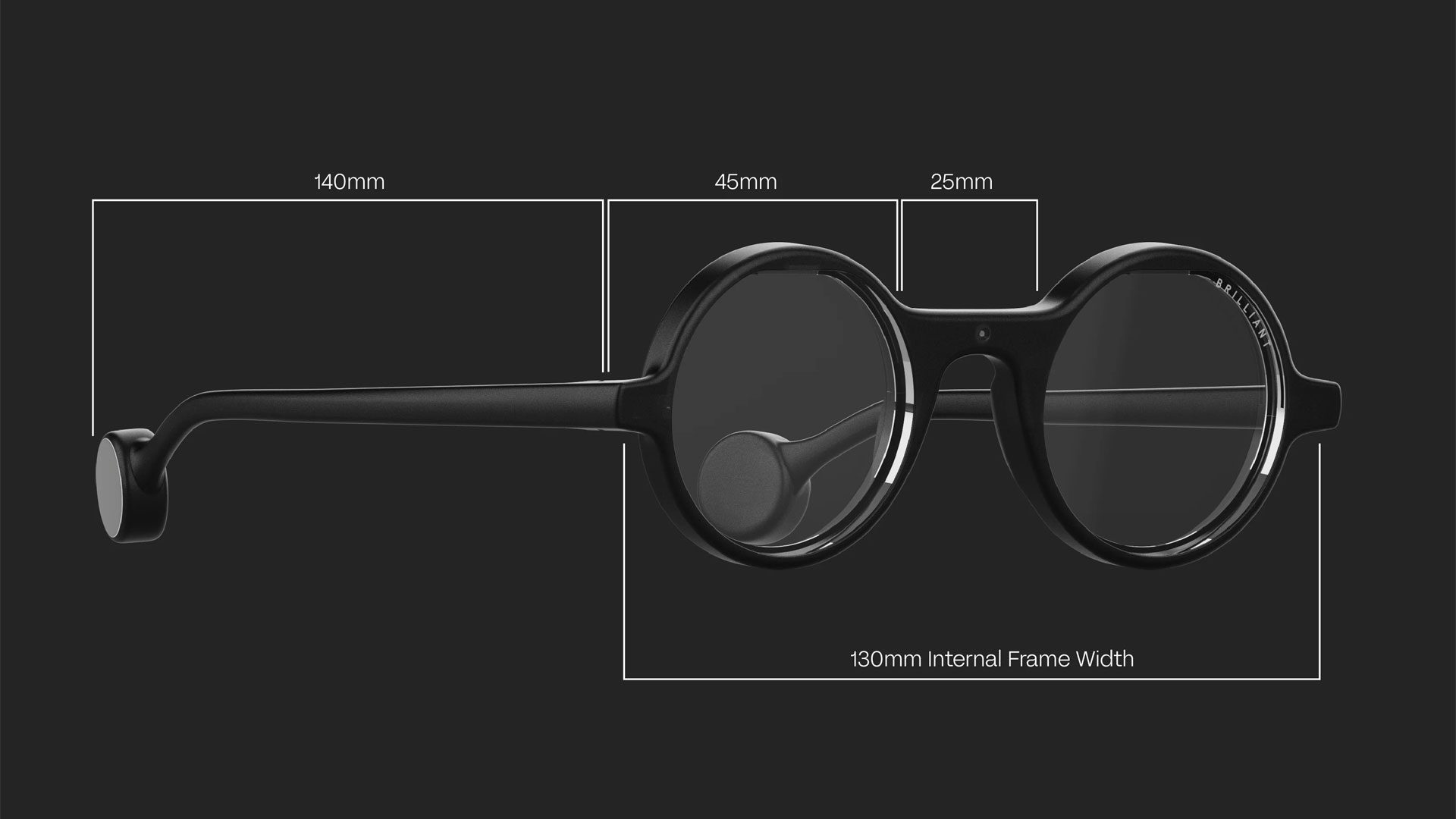

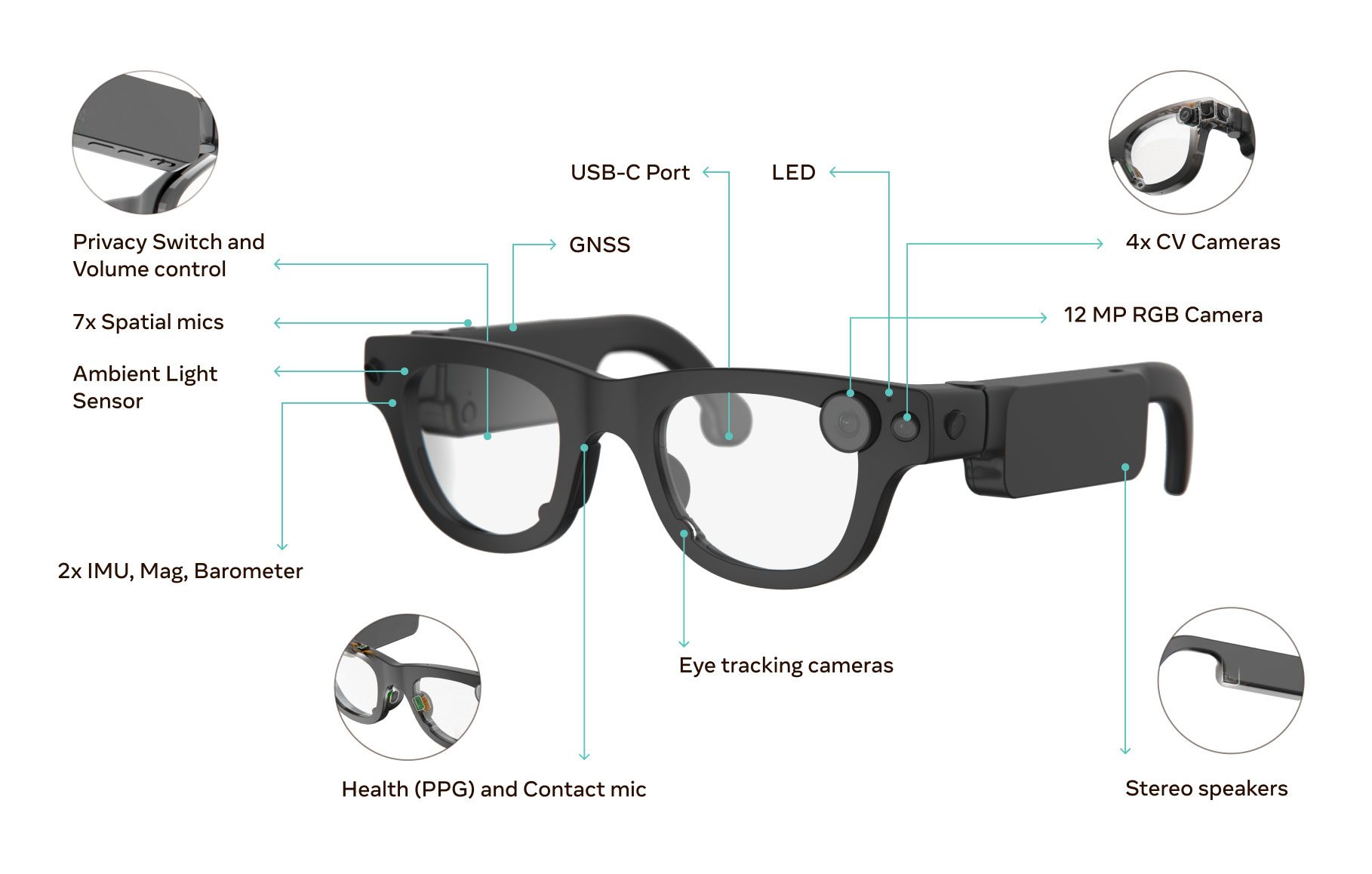

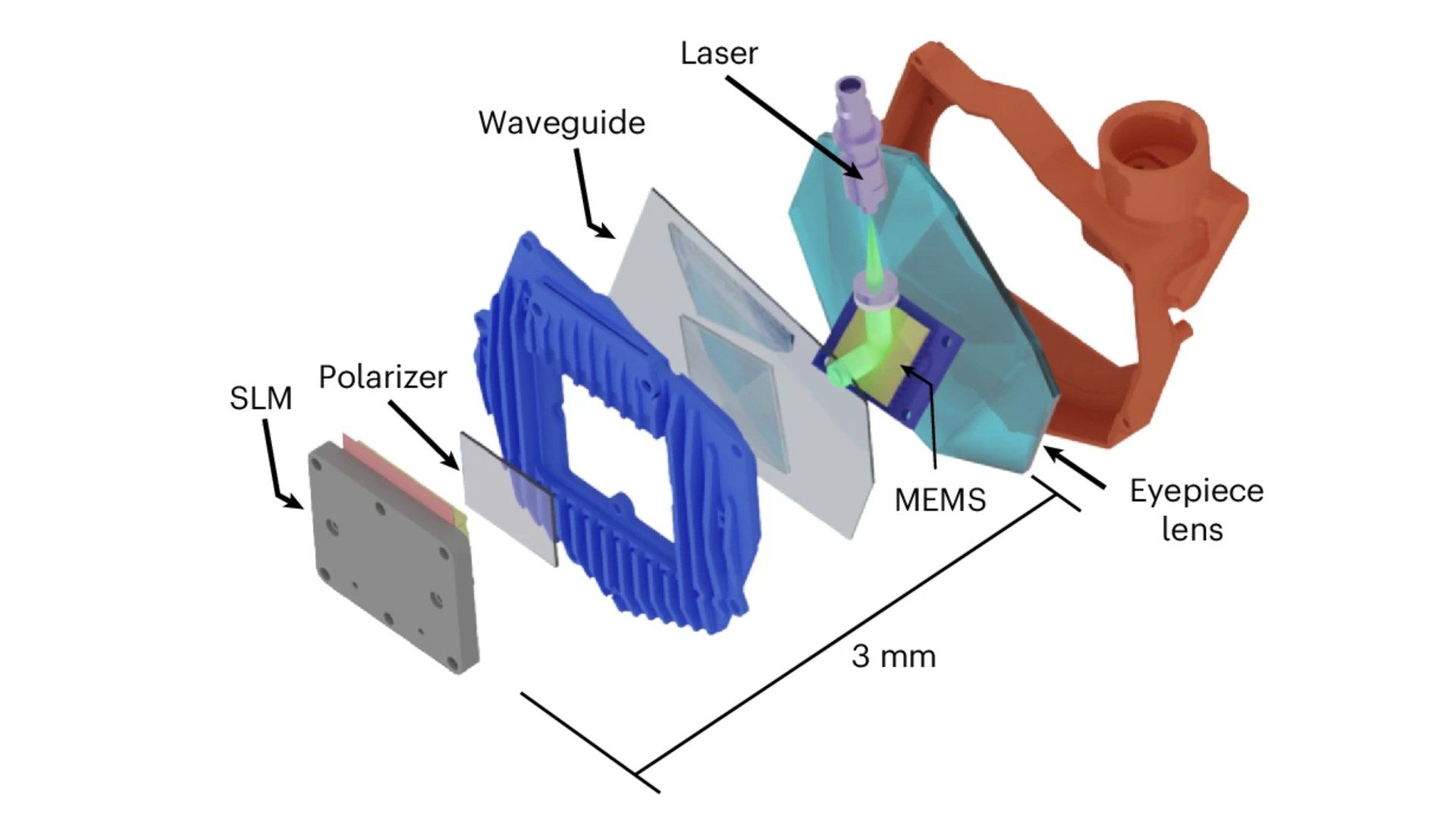

At just 3 millimeters thick, its optical stack integrates a custom-designed waveguide and a Spatial Light Modulator (SLM), which modulates light on a pixel-by-pixel basis to create “full-resolution holographic light field rendering” projected to the eye.

Unlike traditional XR headsets that simulate depth using flat stereoscopic images, this system produces true holograms by reconstructing the full light field, resulting in more realistic and naturally viewable 3D visuals.

“Holography offers capabilities we can’t get with any other type of display in a package that is much smaller than anything on the market today,” Wetzstein tells Stanford Report.

The idea is also to deliver realistic, immersive 3D visuals not only across a wide field-of-view (FOV) but also across a wide eyebox, which allows you to move your eye relative to the glasses without losing focus or image quality. That is one of the “keys to the realism and immersion of the system,” Wetzstein says.

We haven’t seen digital holographic displays in headsets until now because of the “limited space–bandwidth product, or étendue, offered by current spatial light modulators (SLMs),” the team says.

In practice, a small étendue fundamentally limits how large a field of view and how wide a range of possible pupil positions (the eyebox) can be achieved simultaneously.

While the field of view is crucial for providing a visually effective and immersive experience, the eyebox size is important to make this technology accessible to a diversity of users, covering a wide range of facial anatomies as well as making the visual experience robust to eye movement and device slippage on the user’s head.
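The trade-off between field of view and eyebox can be made concrete with a rough back-of-the-envelope sketch. It assumes the common one-dimensional approximation in which an SLM with N pixels has an étendue of about N × λ, so that eyebox width × 2·sin(FOV/2) cannot exceed N × λ. The function name, the pixel count (3840), and the wavelength (520 nm) are illustrative assumptions and are not taken from the paper.

```python
import math


def eyebox_width_mm(num_pixels: int, wavelength_nm: float, fov_deg: float) -> float:
    """Upper bound on 1D eyebox width for a given field of view.

    Assumes the 1D approximation: etendue ~= num_pixels * wavelength,
    and eyebox_width * 2 * sin(fov / 2) <= etendue.
    """
    wavelength_mm = wavelength_nm * 1e-6          # nanometers to millimeters
    etendue_mm = num_pixels * wavelength_mm       # 1D space-bandwidth product
    return etendue_mm / (2.0 * math.sin(math.radians(fov_deg) / 2.0))


# Hypothetical SLM: 3840 pixels across, green light at 520 nm.
for fov in (10, 30, 60, 90):
    width = eyebox_width_mm(3840, 520.0, fov)
    print(f"FOV {fov:3d} deg -> eyebox at most {width:.2f} mm")
```

With these assumed numbers, a 60-degree field of view leaves an eyebox of only about 2 mm, which is smaller than a typical pupil's range of motion. Widening either quantity without shrinking the other requires increasing the effective étendue, which is the limitation the team describes.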

The project is considered the second in an ongoing trilogy. Last year, Wetzstein’s lab introduced the enabling waveguide. This year, they’ve built a functioning prototype. The final stage—a commercial product—may still be years away, but Wetzstein is optimistic.

The team describes it as a “significant step” toward passing what many in the field refer to as a “Visual Turing Test,” essentially the point at which one can no longer “distinguish between a physical, real thing as seen through the glasses and a digitally created image being projected on the display surface,” said Suyeon Choi, the paper’s lead author.

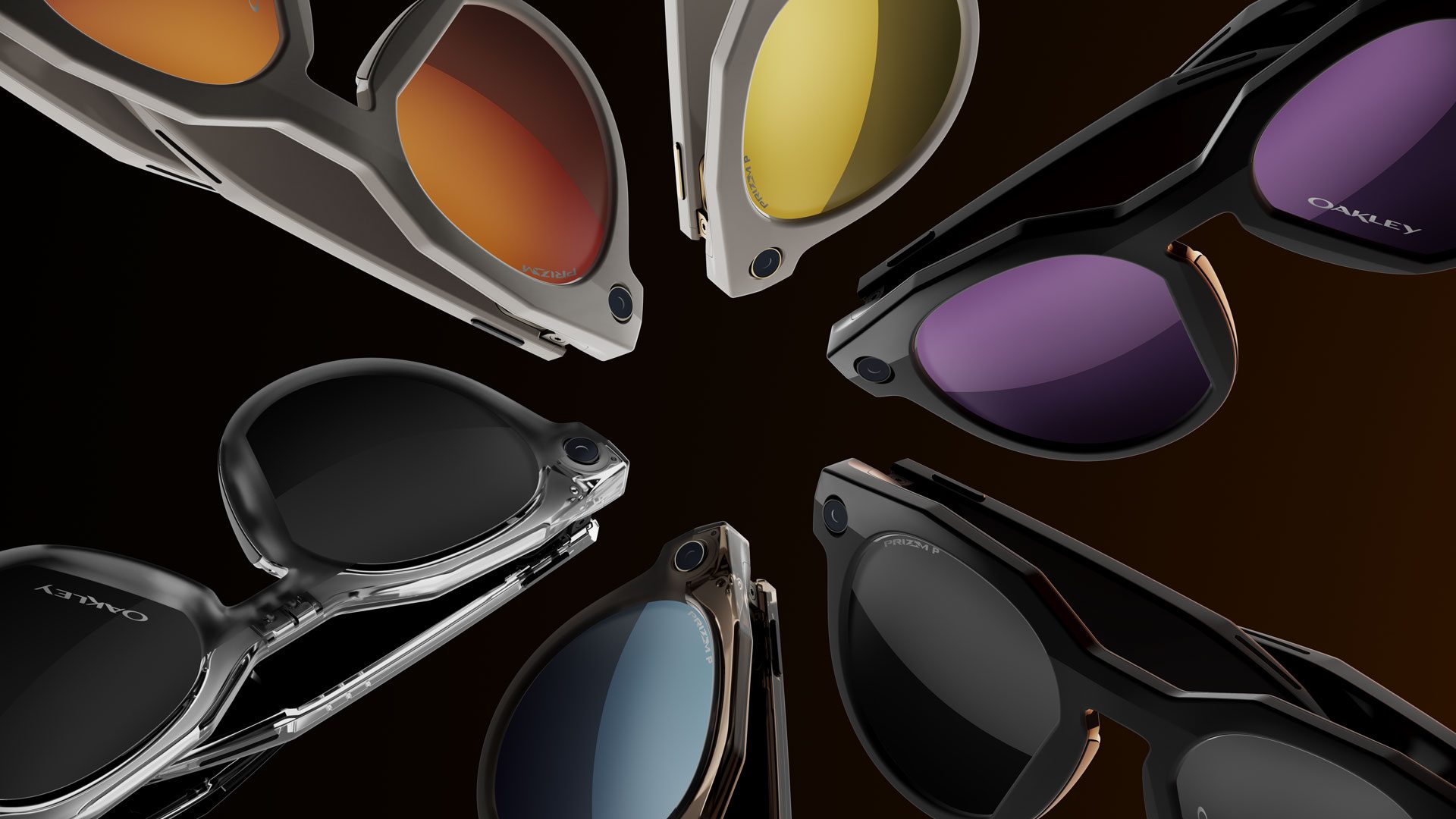

This follows a recent reveal from researchers at Meta’s Reality Labs featuring ultra-wide field-of-view VR & MR headsets that use novel optics to maintain a compact, goggles-style form factor. By contrast, those headsets rely on “high-curvature reflective polarizers” rather than waveguides.