Apple’s Vice President of Human Interface Design, Alan Dye, is leaving the company to lead a new studio within Meta’s Reality Labs division. The move appears to be aimed at raising the bar on the user experience of Meta’s glasses and headsets.

The News

According to his LinkedIn profile, Alan Dye spent nearly 20 years at Apple, most recently as Vice President of Human Interface Design. He was a driving force behind the company’s UI and UX direction, including Apple’s most recent ‘Liquid Glass’ interface overhaul and the visionOS interface that’s the foundation of Vision Pro.

Now Dye is heading to Meta to lead a “new creative studio within Reality Labs,” according to an announcement by Meta CEO Mark Zuckerberg.

“The new studio [led by Dye] will bring together design, fashion, and technology to define the next generation of our products and experiences. Our idea is to treat intelligence as a new design material and imagine what becomes possible when it is abundant, capable, and human-centered,” Zuckerberg said. “We plan to elevate design within Meta, and pull together a talented group with a combination of craft, creative vision, systems thinking, and deep experience building iconic products that bridge hardware and software.”

The new studio within Reality Labs will also include Billy Sorrentino, another high-level Apple designer; Joshua To, who has led interface design at Reality Labs; Meta’s industrial design team, led by Pete Bristol; and art teams led by Jason Rubin, a longtime Meta executive who has been with the company since its 2014 acquisition of Oculus.

“We’re entering a new era where AI glasses and other devices will change how we connect with technology and each other. The potential is enormous, but what matters most is making these experiences feel natural and truly centered around people. With this new studio, we’re focused on making every interaction thoughtful, intuitive, and built to serve people,” said Zuckerberg.

My Take

I’ve been ranting about the fundamental issues of the Quest user experience and interface (UX & UI) for literally years at this point. Meta has largely hit it out of the park with its hardware design, but the software side of things has lagged far behind what we would expect from one of the world’s leading software companies. A post on X from less than a month ago sums up my thoughts:

It’s crazy to see Meta take one step forward with its Quest UI and two steps back, over and over again for years.

They keep piling on new features with seemingly no top-down vision for how the interface should work or feel. The Quest interface is as scattered, confusing, and unpolished as ever.

The new Navigator is an improvement for simply accessing app icons, but it feels like it’s using a completely different paradigm than the rest of the window / panel management interface. Not to mention that the system interface speaks a vastly different language than the Horizon interface.

I have completely lost faith that Meta will ever get a handle on this after watching the interface meander in random directions year after year, punctuated by “refreshes” that look promising but end up being forgotten about 6 months later.

It seems Meta is trying to course-correct before things get further out of hand. If pulling in one of the world’s most experienced individuals at creating cohesive UX & UI at scale is what it takes, then I’m glad to see it happening.

Apple has set a high bar for how easy a headset should be to use. I use both Vision Pro and Quest on a regular basis, and moving between them is a night-and-day difference in usability and polish. And as I’ve said before, the high cost of Vision Pro has little to do with why its interface works so much better; the high-level design decisions, which would work similarly well on any headset, are a much more significant factor.

Back when Meta was still called Facebook, the company had a famous motto: “Move fast and break things.” Although the company no longer champions this motto, it seems like it has had a hard time leaving it behind. The scattered, unpolished, and constantly shifting nature of the Quest interface could hardly embody the motto more clearly.

“Move fast and break things” might have worked great in the world of web development, but when it comes to creating a completely new interface paradigm for the brand new medium of VR, it hasn’t worked so well.

Of course, Dye’s onboarding and the new studio within Reality Labs aren’t only about Quest. In fact, they might not even be mostly about Quest. If I’ve learned anything about Zuckerberg over the years, it’s that he’s a very long-term thinker and does what he can to move his company where it needs to go to be in the right place 5 or 10 years down the road.

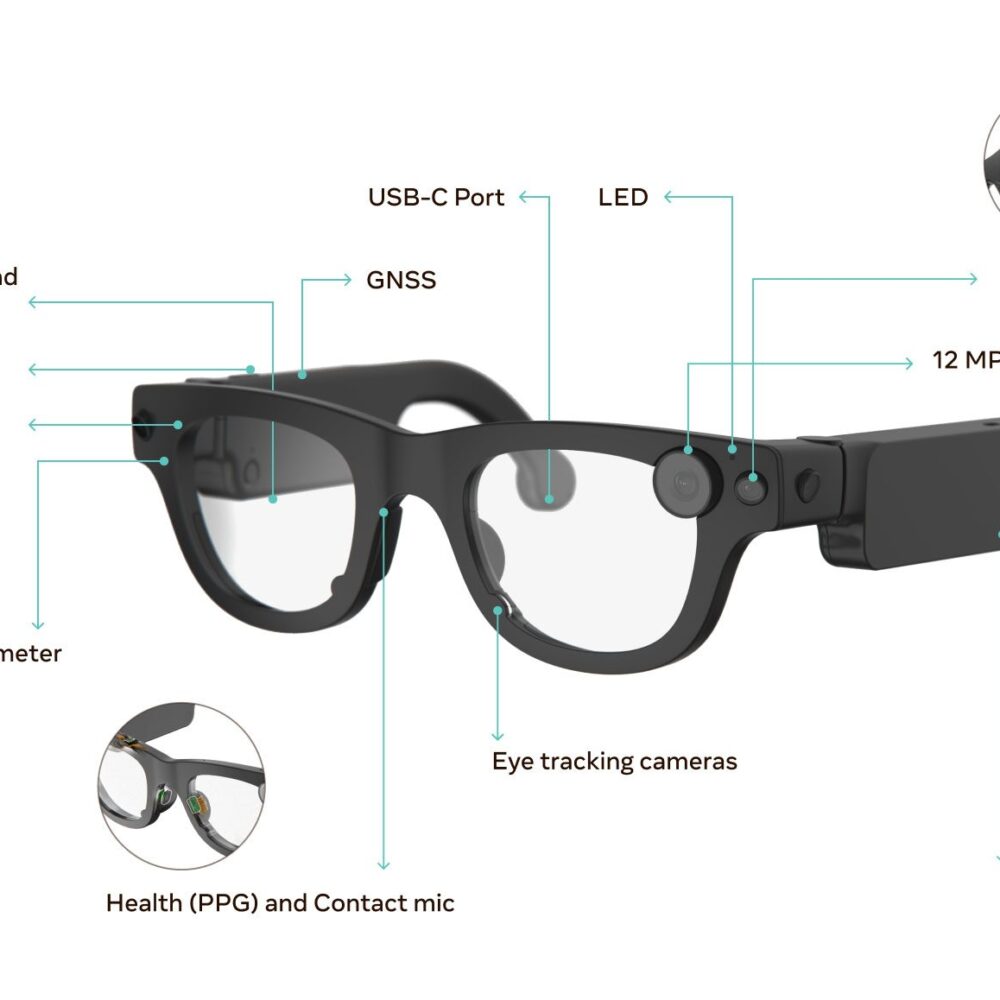

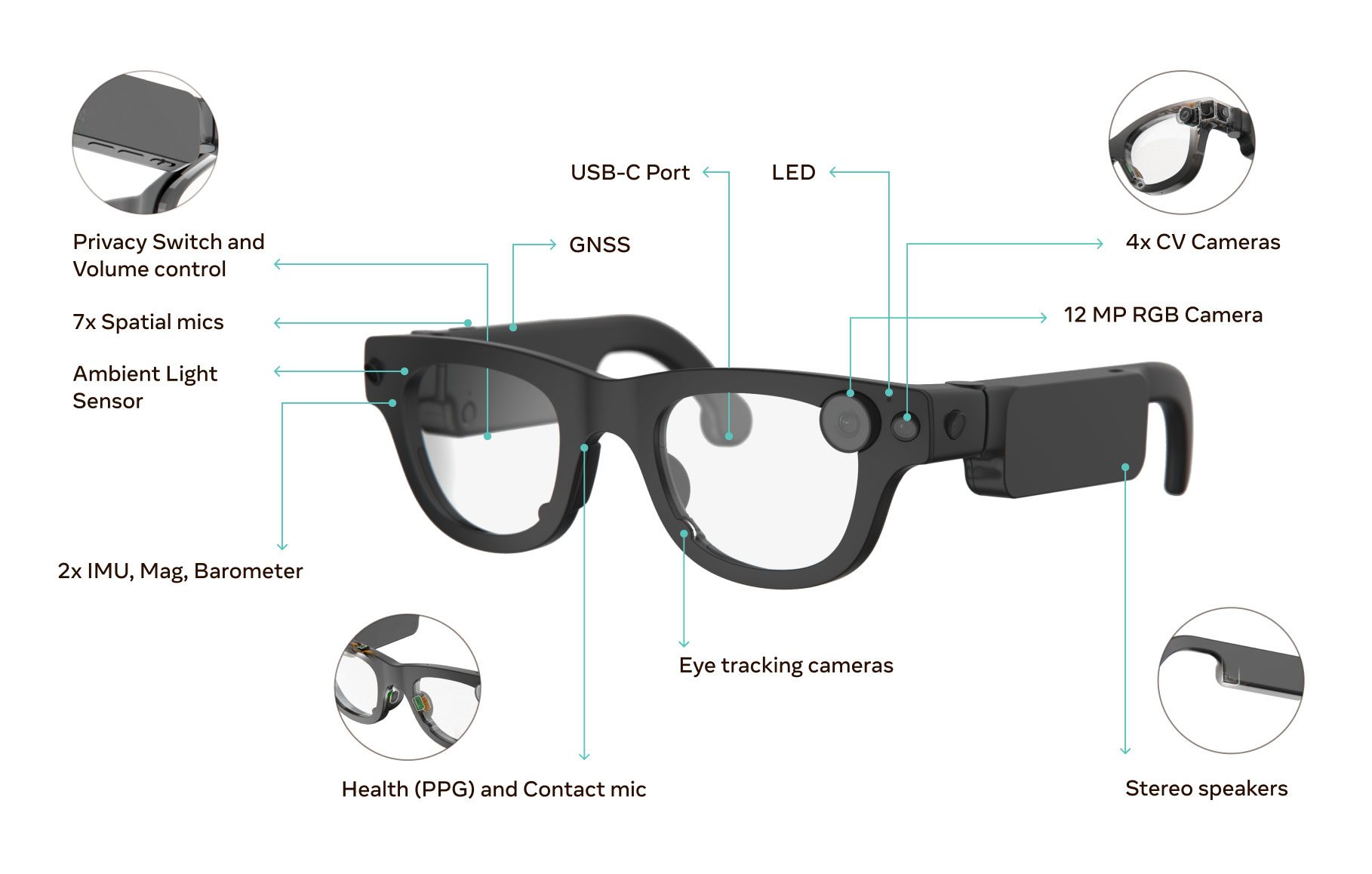

And in 5 to 10 years, Zuckerberg hopes Meta will be dominant, not just in immersive headsets, but in AI smart glasses (and likely unreleased devices) too. This new team will likely not be focused on fixing the current state of the Quest interface, but instead on defining a cohesive UX & UI for the company’s entire ecosystem of devices.

With Alan Dye heading to Meta, there’s a good chance that he will bring with him the Apple design processes that have served that company well for decades. But I have a feeling it will be a significant challenge for him to change “move fast and break things” to “move slow and polish things” within Meta.